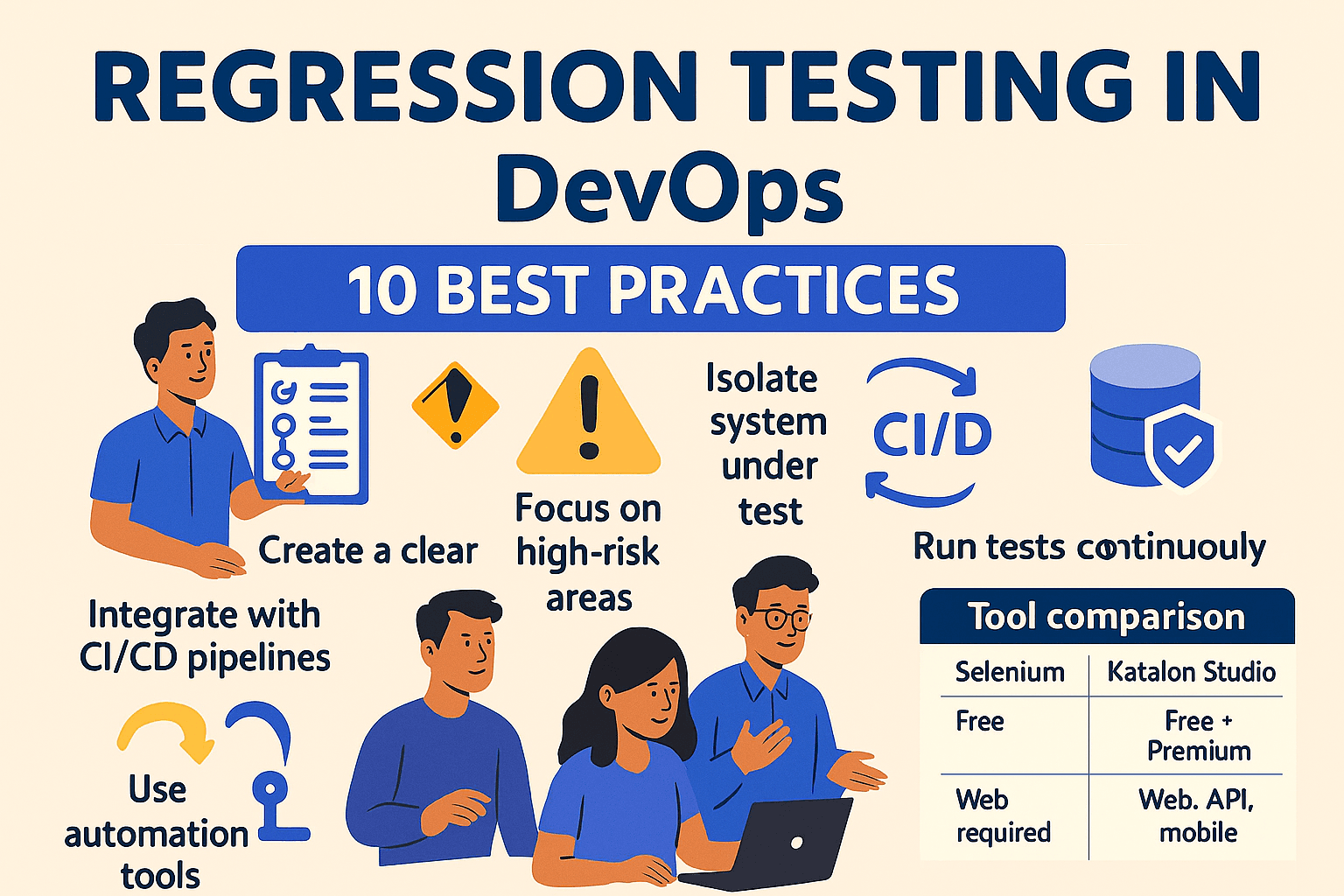

Regression testing ensures that new code changes don’t break existing functionality. In fast-paced DevOps environments, it’s essential for maintaining software stability. This article covers 10 best practices for optimizing regression testing.

Quick Comparison of Tools:

| Feature | Selenium | Katalon Studio |
|---|---|---|
| Pricing | Free | Free + Premium ($167/mo) |
| Test Types | Web apps | Web, mobile, API, desktop |
| Coding Skills | Required | No-code/Low-code options |
| Setup Complexity | Manual setup | Quick installation |

To deliver stable software quickly, integrate regression tests into CI/CD pipelines, automate where possible, and maintain test relevance by updating them with each release.

In agile DevOps, having a solid regression testing plan is essential. It ensures consistent quality while supporting the fast-paced nature of DevOps deployments. Without a plan, teams often find themselves scrambling to address production bugs, which can lead to higher costs and unnecessary delays.

Start by setting clear, aligned goals for your testing. This means understanding not only what needs to be tested but also why each test is important for your product's success. Every test case should contribute to improving product quality and enhancing the user experience.

An effective regression testing plan includes several key components: defining the scope, maintaining a detailed inventory of test cases, specifying environment requirements, outlining a test data strategy, creating a clear execution plan, establishing a defect logging process, and setting sign-off criteria. Together, these elements help streamline testing and ensure no critical areas are overlooked.

Not all test cases are created equal, so prioritization is crucial. Focus on the most critical functionalities and features that have the highest risk of failure. By categorizing test cases into priority levels, your team can allocate resources more effectively.

| Priority | Definition | Example |
|---|---|---|
| 1: Sanity test cases | Verify basic functionality and ensure core features work before detailed testing begins | For a web-based email app: login, email composition, logout, homepage load, and navigation. |
| 2: Crucial non-core features | Test features that enhance user experience but aren't essential for basic operations | Email attachments, search functionality, inbox filtering options. |
| 3: Lower impact features | Covers features that maintain code quality but don't directly affect core user experience | Inbox appearance customization, external calendar integration, automated out-of-office replies. |
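
The priority levels in the table can be attached to test cases as plain data. The following is a minimal sketch, assuming a hypothetical `RegressionCase` record and `select_tests` helper (neither comes from a particular test framework). It shows how a time-boxed run might keep only the higher-priority tiers.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    SANITY = 1       # core features that must work before detailed testing
    CRUCIAL = 2      # important but non-core features
    LOW_IMPACT = 3   # lower impact features


@dataclass(frozen=True)
class RegressionCase:
    name: str
    priority: Priority


def select_tests(cases, max_priority):
    """Return cases at or above the given priority, most important first."""
    chosen = [c for c in cases if c.priority <= max_priority]
    return sorted(chosen, key=lambda c: (c.priority, c.name))


# Hypothetical inventory based on the email app example in the table.
SUITE = [
    RegressionCase("inbox_theme_customization", Priority.LOW_IMPACT),
    RegressionCase("login", Priority.SANITY),
    RegressionCase("email_attachments", Priority.CRUCIAL),
    RegressionCase("compose_email", Priority.SANITY),
]

quick_run = select_tests(SUITE, Priority.CRUCIAL)
print([c.name for c in quick_run])
```

With this inventory, a run limited to `Priority.CRUCIAL` skips the theme customization case and orders the sanity cases first.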

For example, a startup that used Cypress and GitLab CI was able to reduce test execution times by 70%, enabling faster releases.

To measure the effectiveness of your regression testing, track metrics like test pass rates and defect detection rates. Time spent executing the regression suite is another valuable metric to monitor. Clear documentation of testing processes and responsibilities is equally important. Assign accountability to specific stakeholders for each sprint cycle and ensure processes are consistent across different testing scenarios.

Embedding regression tests into your CI/CD pipelines can completely change how your team handles defects. Instead of discovering issues after deployment, you’ll catch them as soon as they’re introduced. This early detection lays the groundwork for greater automation across your pipeline.

When regression tests are automatically triggered, developers receive instant feedback. In fast-paced DevOps environments, where code changes happen multiple times a day, this feedback is invaluable. The quicker you identify problems, the cheaper and easier they are to fix.

Automation is the backbone of effective CI/CD integration. Relying on manual testing simply can’t keep up with the speed of modern DevOps workflows. Automated regression tests run consistently without requiring human input, ensuring that every code change is checked against the application’s existing functionality.

Start by building a robust test suite that prioritizes your application’s most critical features - those whose failure would cause the most disruption. Once those are covered, gradually expand your tests to include secondary features and edge cases. This step-by-step approach strengthens your regression testing strategy, ensuring ongoing validation throughout the development process.

In CI/CD environments, speed matters. Long-running test suites can slow down deployments, creating bottlenecks. To avoid this, use parallel testing to run multiple tests at the same time, cutting down execution time significantly. Additionally, consider risk-based prioritization to ensure that the most critical tests run first.
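
As a rough illustration of combining both ideas, the sketch below submits checks in risk order and runs them concurrently with Python's standard `concurrent.futures` module. The check functions and risk numbers are placeholders invented for this example; a real suite would normally rely on its test runner's own parallel mode (for pytest, the pytest-xdist plugin is one option).

```python
from concurrent.futures import ThreadPoolExecutor


# Placeholder checks; real ones would exercise the application.
def check_login():
    return True


def check_checkout():
    return True


def check_profile_theme():
    return True


# (risk, name, callable): a lower risk number means more critical.
CHECKS = [
    (3, "profile_theme", check_profile_theme),
    (1, "checkout", check_checkout),
    (1, "login", check_login),
]


def run_checks(checks, workers=4):
    """Submit checks in risk order and run them concurrently."""
    ordered = sorted(checks, key=lambda c: (c[0], c[1]))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [(name, pool.submit(fn)) for _, name, fn in ordered]
        # Collect results in submission order so critical checks report first.
        return {name: future.result() for name, future in futures}


results = run_checks(CHECKS)
print(results)
```

Threads suit checks that mostly wait on I/O, such as HTTP calls. CPU-heavy or state-sharing tests may be better served by separate processes or separate CI jobs.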

Continuously monitor test results and address any failures immediately. Ignoring failing tests increases the risk of missing real problems. Integration with version control systems further streamlines this process, making it easier to track and resolve issues.

Connect your regression testing directly to your version control system so tests are triggered automatically whenever code changes are made. Most CI/CD platforms support these automated triggers, making it easy to validate updates in real time.

When it comes to regression testing, not all parts of your application carry the same level of risk. By zeroing in on high-risk areas first, you can make the most of your resources while minimizing potential damage. High-risk defects often lead to significant financial losses, making it critical to address these vulnerabilities early.

Start by assessing the potential impact and likelihood of failure for each component. Prioritize areas that are crucial to your business operations or user experience. For example, payment processing, user authentication, data storage, and core business logic are typically high-priority areas. Pay special attention to components that have undergone recent changes or have a history of issues. Features directly tied to revenue and customer satisfaction - like checkout systems, inventory management, and user account functionalities - should take precedence over purely cosmetic elements.

Use tools like version control systems and bug trackers to gather data that can help you identify these high-risk areas. This data-driven approach ensures your testing efforts are focused where they matter most.
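
One way to turn that data into a ranking is a simple impact × likelihood score. The sketch below is only an illustration: the `Component` fields, the weighting inside `likelihood`, and the sample numbers are all assumptions, and a team would calibrate them against its own history.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    impact: int          # 1 (cosmetic) to 5 (business-critical), set by the team
    recent_commits: int  # e.g. counted from version control history
    past_defects: int    # e.g. counted from the bug tracker


def likelihood(component):
    """Map change and defect history onto a 1-5 scale (illustrative weighting)."""
    raw = component.recent_commits + 2 * component.past_defects
    return min(5, 1 + raw // 5)


def risk_score(component):
    """Risk as impact multiplied by likelihood of failure."""
    return component.impact * likelihood(component)


components = [
    Component("checkout", impact=5, recent_commits=12, past_defects=4),
    Component("profile_theme", impact=1, recent_commits=3, past_defects=0),
    Component("authentication", impact=5, recent_commits=2, past_defects=1),
]

ranked = sorted(components, key=risk_score, reverse=True)
for c in ranked:
    print(c.name, risk_score(c))
```

Here the frequently changed, defect-prone checkout component ranks first, while the cosmetic theme feature ranks last.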

"Quality is never an accident; it is always the result of intelligent effort." - Kavitha Hanuram, LinkedIn Top Voice

Focus your testing on functions where failure would have the most severe consequences. Map out potential failure points to identify areas that could lead to major disruptions. Begin by testing critical, high-risk functionalities before moving on to less risky components. Catching issues early in these areas can save up to 85% in defect-fixing costs.

To streamline your testing process, categorize regression tests by their importance to your business and their technical risk.

Your regression test suite should grow and adapt alongside your application. As your software evolves, so must your tests. Outdated test cases can lead to a false sense of security and waste valuable resources, while missing tests for new features can leave critical gaps in your coverage.

Regularly review and clean up your test cases to eliminate outdated or redundant scenarios. As software changes, some tests become irrelevant - targeting removed features, deprecated APIs, or redesigned functionality. Keeping these in your suite only adds clutter and inefficiency.

Focus on maintaining a lean and effective test suite. Remove obsolete cases, merge overlapping ones to streamline execution, and incorporate new tests to cover updated functionalities or address user-reported issues. By doing so, you’ll ensure your test suite remains aligned with your application’s current state.

Every software update should trigger a corresponding update to your test suite. Changes made by developers require updated tests to maintain full coverage. Clear communication across teams is key to understanding these changes and avoiding overlooked functionality or missed test scenarios.

Leverage impact analysis tools to identify which test cases are affected by recent updates. These tools can help you focus on the areas of your test suite that need immediate attention. Start with functionalities directly impacted by the changes, and then review adjacent areas that might be indirectly affected.
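
The core idea behind such tools can be sketched as a lookup from changed files to the tests that exercise them. The mapping and file names below are hypothetical; real tools typically derive this mapping from coverage data or dependency graphs rather than a hand-written dictionary.

```python
# Hypothetical mapping from source modules to the regression tests covering them.
TEST_MAP = {
    "auth/login.py": {"test_login", "test_password_reset"},
    "auth/session.py": {"test_login", "test_logout"},
    "billing/checkout.py": {"test_checkout"},
}


def affected_tests(changed_files, test_map):
    """Return the tests touched by a change, plus files with no mapped tests."""
    selected = set()
    unmapped = []
    for path in changed_files:
        tests = test_map.get(path)
        if tests is None:
            unmapped.append(path)  # a possible coverage gap worth reviewing
        else:
            selected |= tests
    return sorted(selected), unmapped


tests, unmapped = affected_tests(["auth/login.py", "docs/readme.md"], TEST_MAP)
print(tests, unmapped)
```

Reporting unmapped files separately makes it visible when a change lands in code that no regression test is known to cover.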

"Each change, whether a bug fix, feature enhancement, or system update, can introduce unforeseen defects. Regression testing identifies these risks, preserving the application's reliability and user experience." - Aronin Ponnappan, Robotics specialist, SGBI Inc

As you update your tests to reflect current functionalities, ensure they remain connected to your business requirements. Traceability between test cases and their corresponding requirements, user stories, or business logic is crucial.

Regression test packs can quickly lose relevance if they aren’t actively maintained. Refining them after every release ensures they accurately reflect the application’s current state. Prioritize test cases based on the importance of features, the potential impact of changes, and the likelihood of defects. This approach ensures that critical functionalities receive adequate attention while less significant areas don’t consume unnecessary resources.

Automated monitoring tools can also help by flagging code changes that affect your existing tests. These tools reduce the burden of manual oversight, ensuring your test suite stays up to date with minimal effort.

Automation can turn regression testing from a tedious and time-consuming task into a smooth process that boosts your DevOps workflows. Currently, only 15–20% of regression testing across the industry is automated. Teams that adopt automation often see faster results, higher accuracy, and broader test coverage. Let’s explore how automation achieves these benefits and fits into modern testing workflows.

Automated tests can validate builds in hours instead of days. This speed is crucial in DevOps, where quick feedback on code changes is essential. Once automated, these test cases run without requiring manual intervention, giving your team more time to focus on strategic priorities. Over time, the efficiency gains add up. Automated tests can run in parallel across multiple environments, allowing you to cover diverse scenarios without extending your testing schedule. This parallel execution ensures that your application is thoroughly validated across different setups in a fraction of the time.

Manual testing, especially for repetitive tasks, can lead to errors and inconsistencies. Automated regression tests remove much of this risk by running the same way every time, producing consistent and reliable results. This reliability is especially valuable for testing complex workflows or edge cases that might be missed during manual testing. By reducing human error, automated testing provides a more dependable way to validate your application's functionality.

When selecting automation tools, focus on those that align with your DevOps needs. Key features to prioritize include cross-platform compatibility, integration with CI/CD pipelines, and detailed reporting that provides actionable insights. Other important considerations are parallel testing capabilities to save time, version control support for tracking script changes, scalability to accommodate future growth, and the ability to replicate real-world testing environments.

Automated tests should be directly integrated into your CI/CD pipeline to catch defects as soon as code is committed.

"CI CD test automation stands as an integral part of modern practices that significantly improve the speed, quality, and reliability of software delivery." - Testlio

In fact, developers using CI/CD tools are at least 15% more likely to be among top-performing teams.

Not every test case is a candidate for automation. Focus on automating stable, frequently run, and high-risk test cases to maximize efficiency. Use data-driven testing to ensure your scripts can handle multiple data sets, which helps expand test coverage without creating extra maintenance work. Regular updates to your test scripts are essential to keep up with application changes. By continuously monitoring test results, you can fine-tune your automation strategy as your application evolves, ensuring it remains effective and efficient over time.
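
Data-driven testing can be as simple as keeping scenarios in a table and looping over them. The sketch below uses the standard library's `unittest` with `subTest`; the `apply_discount` function and its data rows are invented for illustration and stand in for real application logic.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100


# Each row is one scenario: (price, percent, expected result).
CASES = [
    (100.0, 0, 100.0),
    (100.0, 25, 75.0),
    (20.0, 50, 10.0),
    (50.0, 100, 0.0),
]


class ApplyDiscountTest(unittest.TestCase):
    def test_discount_table(self):
        for price, percent, expected in CASES:
            # subTest reports each failing row separately instead of
            # stopping at the first failure.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)
```

Adding coverage for a new scenario then means adding a row to `CASES` rather than writing another test method.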

When working in DevOps, isolating the system under test is a game-changer. By keeping your application separate from external dependencies during testing, you can avoid unexpected failures caused by outside factors like APIs, databases, or third-party services. This approach ensures your results are consistent and reliable, giving you a clear view of how your code performs.

Isolation testing offers several benefits. It simplifies workflows, boosts code quality, and makes development more efficient. Most importantly, it allows you to catch bugs early by focusing on how individual modules behave before integrating them. Fixing issues at this stage is faster and less costly. Let’s explore how to effectively isolate dependencies and focus your tests on the behavior of your code.

"The best way to find bugs is to isolate them." - Kent Beck, Software Engineer and Agile Advocate

A major advantage of isolation testing is that it removes hidden local dependencies that can cause headaches during deployment. When tests rely on real external services, they might pass on your development machine but fail in production due to network interruptions, service outages, or configuration mismatches. Isolation testing eliminates these variables, delivering accurate and dependable outcomes.

To isolate your system effectively, mocking and stubbing are your best tools. These techniques allow you to replace external APIs, databases, and services with simulated versions, so your tests don’t rely on actual connections. For instance, if you’re testing a function that processes payments, mocking the payment gateway ensures your tests run smoothly even if the gateway is unavailable.

Here’s an example of replacing real API calls with mocks:

```python
from unittest.mock import patch

import requests


def fetch_data_from_api(url):
    response = requests.get(url)
    return response.json()


@patch('requests.get')
def test_fetch_data_from_api(mock_get):
    mock_get.return_value.json.return_value = {"key": "value"}
    data = fetch_data_from_api('https://api.example.com')
    assert data == {"key": "value"}
```

For tests that depend on databases, using in-memory databases is a great way to ensure isolation. These databases operate entirely in memory, preventing interference between tests. SQLite, for example, offers an in-memory option that’s perfect for this purpose:

```python
import sqlite3


def test_database_operations():
    # Create an in-memory SQLite database
    conn = sqlite3.connect(':memory:')
    cursor = conn.cursor()

    # Set up schema and data
    cursor.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    cursor.execute("INSERT INTO users (name) VALUES ('Alice')")

    # Test query
    cursor.execute("SELECT * FROM users")
    result = cursor.fetchone()
    assert result == (1, 'Alice')
```

When testing applications that rely on data, using fake data generators can simulate realistic input scenarios without needing production data. Libraries like Faker make it easy to create test cases with diverse data:

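```python
from types import SimpleNamespace

from faker import Faker

fake = Faker()


def create_user_in_db(name, email):
    # Placeholder for the application's own persistence function, which the
    # original example does not show; swap in the real implementation.
    return SimpleNamespace(name=name, email=email)


def test_create_user():
    # Generate fake user data
    fake_name = fake.name()
    fake_email = fake.email()

    # Test logic that interacts with database
    user = create_user_in_db(fake_name, fake_email)
    assert user.name == fake_name
    assert user.email == fake_email
```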

By controlling the data and environment, you can focus your tests on the functionality that matters most.

Isolation testing sharpens your focus on individual components, allowing you to evaluate their performance under various conditions. This targeted approach speeds up regression tests and provides clearer feedback, helping you pinpoint exactly what’s broken. By eliminating external service issues from the equation, you gain confidence in your results and reduce unnecessary troubleshooting.

Waiting until the end of development to run regression tests is like checking your car’s brakes only after a long, high-speed drive - by then, any issues are harder and costlier to fix. Instead, running regression tests throughout the development process turns testing into a continuous quality assurance effort.

The "shift-left" testing approach focuses on catching bugs early in the software development lifecycle. This is especially important in DevOps, where frequent code changes can lead to unexpected issues, even from minor updates.

Catching bugs early means less rework and lower costs. When issues are identified during development, they can be fixed immediately while the code is still fresh in the developer's mind. Waiting until later, after multiple layers of changes, often requires more time to investigate and resolve. Think of it as performing regular maintenance to prevent costly breakdowns. This proactive testing approach aligns perfectly with Agile practices, ensuring smoother workflows.

In Agile environments, regression tests should be part of every sprint. Run these tests whenever the codebase changes - whether it’s a new feature, a bug fix, or a significant update. By testing continuously, you can quickly spot and address errors before they grow into larger problems, keeping your project on track and your deployments error-free.

Continuous testing stops small changes from causing big problems. For instance, a tweak to the user authentication function could unexpectedly break the password reset feature. Running tests throughout development helps you pinpoint the exact cause of such issues, making debugging faster and more effective. This approach ensures that a single modification doesn’t spiral into a series of widespread failures.

Automating regression tests is key to getting quick feedback and reducing the chance of regressions affecting end users. By integrating automated tests into your CI/CD pipelines, you can validate every code commit in real time. Focus your automation efforts on high-risk areas and core functionalities to strike a balance between thorough testing and maintaining development speed.

Tests are only as good as their relevance. Regularly update your test cases to reflect changes in the codebase. Outdated tests can create false confidence or waste time on irrelevant scenarios. Keeping automated tests current ensures that they continue to reinforce the quality of every release. When regression tests are continuously maintained and run, they shift from being a bottleneck to becoming a key enabler of faster, more dependable software delivery.

To ensure smooth continuous delivery in DevOps, expanding regression test coverage is crucial. This process helps identify and address potential failure points that might otherwise slip through the cracks. Effective test coverage goes beyond basic functionality, delving into edge cases, integrations, and new features that could behave unexpectedly.

Edge cases often expose hidden vulnerabilities. For example, in Amazon's early days, users could order negative quantities of products, leading to financial losses that could have been avoided with proper input validation. Testing boundary conditions using minimum and maximum values is essential to catch such issues. Additionally, rare but foreseeable events should not be overlooked. Take EA's racing game as an example: it became unplayable on Leap Day 2024 because calendar-based edge cases weren't accounted for.
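
Both kinds of edge case can be expressed as small boundary checks. The sketch below is illustrative only: `validate_quantity`, `next_day`, and the `MAX_QUANTITY` limit are assumptions made for this example, not code from any of the systems mentioned above.

```python
from datetime import date, timedelta

MAX_QUANTITY = 99  # assumed per-order limit, for illustration only


def validate_quantity(quantity):
    """Accept only whole-number quantities from 1 to MAX_QUANTITY."""
    if isinstance(quantity, bool) or not isinstance(quantity, int):
        return False
    return 1 <= quantity <= MAX_QUANTITY


def next_day(day):
    """Return the calendar day after `day`."""
    return day + timedelta(days=1)


# Boundary values: just below, on, and just above each limit.
boundary_cases = {-1: False, 0: False, 1: True, 99: True, 100: False}
for quantity, expected in boundary_cases.items():
    assert validate_quantity(quantity) is expected

# Calendar edge cases around Leap Day 2024.
assert next_day(date(2024, 2, 28)) == date(2024, 2, 29)
assert next_day(date(2024, 2, 29)) == date(2024, 3, 1)
assert next_day(date(2023, 2, 28)) == date(2023, 3, 1)
```

Keeping the boundary values in one table makes it easy to see which limits are covered and to extend the list when a limit changes.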

Integration testing ensures that all components of your system work together seamlessly. This step is especially important for areas like data migration and component interfaces. Pay particular attention to critical business features, as these are often subject to frequent changes. By doing so, you can catch potential ripple effects before they escalate into production issues.

Coverage metrics provide a clear picture of where your testing efforts may be falling short.

"Test coverage reveals blind spots where bugs could hide and gives you data to discuss testing progress with stakeholders." - Rosie Sherry, Ministry of Testing

Projects with over 80% test coverage tend to have 30% fewer bugs than those with less than 50% coverage. Moreover, improving test coverage from 60% to 85% can reduce the average time to fix bugs by 25%.

| Coverage Type | What It Measures | Best Use Case |
|---|---|---|
| Statement Coverage | Lines of code executed during testing | Identifying untested code sections |
| Branch Coverage | Decision points tested for all outcomes | Ensuring logic paths work as intended |
| Requirements Coverage | Features and user scenarios validated | Verifying business requirements |
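
The difference between statement and branch coverage is easiest to see on a tiny function. In the sketch below (a made-up `shipping_fee` function), a single test with `is_member=True` executes every statement, yet the outcome where the `if` condition is false is never exercised.

```python
def shipping_fee(order_total, is_member):
    """Hypothetical pricing rule: members ship free, everyone else pays a flat fee."""
    fee = 5.0
    if is_member:
        fee = 0.0
    return fee


# This test alone reaches 100% statement coverage: every line runs.
assert shipping_fee(50.0, is_member=True) == 0.0

# Branch coverage additionally requires the path where the `if` is skipped.
assert shipping_fee(50.0, is_member=False) == 5.0
```

Tools that measure branch coverage (coverage.py, for instance, has a branch measurement mode) would flag the missing second test, whereas a statement-only report would not.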

Automation plays a key role in scaling test coverage effectively. For instance, Sony PlayStation conducts full regression tests nightly, covering main scenarios, boundary conditions, and error paths. They also use tools like Applitools for UI verification across more than 100 languages, transforming functional tests into visual comparison tools.

"Automation is a fantastic way to really, one, expedite your testing and then two, save your productivity because you're no longer retesting the same test case over and over again. You can write one code or one scriptless automation for that test case to keep and retain it and then kick it off when regression comes." - Shannon Lee, Senior Solutions Engineer

AI-powered tools are also gaining traction. These tools can analyze test data, detect patterns, and even generate test cases for overlooked scenarios. Some organizations are experimenting with machine learning to predict which parts of an application are most vulnerable when new code is introduced.

While aiming for comprehensive coverage is important, achieving 100% coverage is rarely feasible. Instead, focus on high-risk areas and critical paths. Involve developers early in the process to identify boundary conditions and prioritize scenarios that pose the greatest risk. Regularly reviewing coverage using visualization tools can help you adapt your testing to match real-world usage patterns and uncover unexpected issues. Expanding test coverage not only reduces the chance of errors slipping through but also reinforces the reliable processes established in earlier testing phases.

Regression testing thrives when development, QA, and operations teams work together seamlessly. By breaking down silos and encouraging collaboration, teams can detect issues faster and deliver higher-quality results.

For DevOps regression testing to succeed, it’s essential to build cross-functional teams. These teams bring together developers, QA testers, and operations staff, all working toward shared objectives. This approach eliminates the inefficiencies of isolated workflows and traditional handoffs. A great example is AWS's "two-pizza teams" model, which emphasizes small, empowered groups that drive innovation and enhance collaboration.

"DevOps is not just about the development and operations teams working together, but it's also about the entire organization adopting a mindset of innovation and collaboration." - Patrick Debois

Structured communication is a game-changer, significantly boosting deployment rates and cutting lead times. Companies that prioritize clear communication see benefits like 20% higher employee engagement and a 30% drop in project errors. Using consistent terminology and tools - whether it’s Gherkin syntax or Test-Driven Development (TDD) - ensures everyone is on the same page.

"In DevOps testing collaboration, adopting a common language and framework is key. Implement universal terms and tools, like Gherkin syntax or TDD, fostering seamless communication between developers, QA, and ops. This aligns understanding, accelerates feedback loops, and bolsters a cohesive testing strategy, enhancing product quality and deployment efficiency." - Sagar More, Resiliency Geek

Modern tools not only improve communication but also enhance project timelines and productivity. For instance, integrating Jira and GitHub with Slack can reduce issue resolution times by 57%. ChatOps platforms, which enable real-time feedback, have been shown to improve deployment frequency by 38%.

| Collaboration Benefit | Impact |
|---|---|
| Faster Project Timelines | 50% quicker completion using collaboration tools |
| Improved Issue Resolution | 57% faster resolution with integrated platforms |
| Higher Deployment Frequency | 38% increase with ChatOps tools |

When developers, QA, and operations teams share responsibility, regression testing becomes more effective. Teams with clearly defined objectives are 60% more likely to achieve better outcomes. By ensuring every team member understands their role in maintaining quality and preventing regressions, collaboration becomes smoother and more productive.

Continuous feedback is essential for maintaining quality and resolving issues quickly. Teams that embrace a culture of feedback are 1.3 times more likely to perform at a high level. Tools that send immediate alerts when regression tests fail help teams tackle problems before they escalate. Regular check-ins during standups or sprint reviews also provide opportunities to fine-tune processes and strengthen collaboration.

Regression testing thrives on data analysis. Teams that adopt automated testing often experience a 40% reduction in testing time and a 30% boost in overall testing coverage. But these gains don’t happen automatically - they rely on actively monitoring results and using insights to refine testing processes. By consistently tracking metrics, teams can identify areas for improvement and build on earlier strategies.

Start by measuring test coverage, aiming for 80–90% in critical areas. This reduces the risk of bugs slipping into production.

Pay attention to the Defect Detection Rate (DDR), which shows how well your tests are catching issues before they impact users. A high DDR means your regression suite is doing its job. On the other hand, the Defect Escape Rate (DER) measures how many bugs make it to production. A high DER is a red flag that your test suite needs strengthening.
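
Exact definitions of these two rates vary between teams. The sketch below assumes one common convention, in which both are shares of all defects found in a release: DDR counts those caught in testing and DER counts those first seen in production. The sample numbers are made up.

```python
def defect_rates(found_in_testing, found_in_production):
    """Return (DDR, DER) as fractions of all known defects for a release."""
    total = found_in_testing + found_in_production
    if total == 0:
        return 0.0, 0.0  # no defects recorded, so neither rate is meaningful
    return found_in_testing / total, found_in_production / total


ddr, der = defect_rates(found_in_testing=45, found_in_production=5)
print(f"DDR: {ddr:.0%}, DER: {der:.0%}")
```

Under this convention the two rates always sum to 1, so tracking either one over successive releases shows whether the suite is catching a growing or shrinking share of defects.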

Test execution time is another crucial metric. Developers often cite slow tests as a major challenge - 31% of them, to be exact. Monitoring execution speed can help identify bottlenecks that slow down the development process.

Once you’ve defined your metrics, use them to analyze failure patterns. Look at pass/fail rates, track flaky tests (aim to keep these under 5%), and monitor how much effort goes into test maintenance. If specific modules or features frequently fail, it might signal deeper issues like architectural flaws or insufficient test coverage.
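
A flaky-test rate can be estimated from run history. The sketch below assumes a simple definition: a test is flaky if it both passed and failed on the same commit. The run records are invented, and a real pipeline would pull them from its CI system.

```python
from collections import defaultdict

# Hypothetical run history: (test name, commit, passed).
RUNS = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", True),
    ("test_search", "abc123", True),
    ("test_search", "abc123", False),    # same commit, different outcome
    ("test_checkout", "abc123", False),
    ("test_checkout", "abc123", False),  # consistently failing, not flaky
    ("test_export", "abc123", True),
]


def flaky_tests(runs):
    """Return names of tests with mixed outcomes on at least one commit."""
    outcomes = defaultdict(set)
    for name, commit, passed in runs:
        outcomes[(name, commit)].add(passed)
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})


def flaky_rate(runs):
    """Share of distinct tests that are flaky under the definition above."""
    all_tests = {name for name, _, _ in runs}
    return len(flaky_tests(runs)) / len(all_tests) if all_tests else 0.0


print(flaky_tests(RUNS), flaky_rate(RUNS))
```

Separating flaky tests from consistently failing ones matters because the two call for different fixes: the first points at the test or its environment, the second more likely at the code.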

Test maintenance effort is another area to watch. While automated testing can reduce testing costs by 60% over time, this only holds true if maintenance remains manageable. If you’re spending too much time fixing broken tests, it may be time to rethink your automation approach.

Modern regression testing tools can simplify tracking by offering automated reporting. These systems provide real-time data with greater accuracy and scalability. Look for tools with real-time dashboards that display metrics like test status, pass/fail rates, defect trends, and coverage. Visual dashboards make it easier for stakeholders to understand progress, while built-in defect tracking speeds up issue resolution.

Use the insights from your testing data to refine your processes. If you notice frequent failures in specific areas, adjust your testing strategy to focus on those weak spots. As your application evolves, retire outdated test cases and add new ones to keep your suite relevant.

Tracking your Automation Return on Investment (ROI) is also important. This metric compares the savings from automation to the costs of implementation and maintenance. It’s a powerful way to demonstrate the value of your testing efforts and secure additional resources for improvements.
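
As a worked example, ROI is often expressed as net savings divided by total cost. The sketch below assumes that formula and uses invented figures; what counts as "savings" (for instance, manual testing hours avoided) is a choice each team has to define for itself.

```python
def automation_roi(manual_cost_avoided, implementation_cost, maintenance_cost):
    """Return ROI as a fraction: (savings - total cost) / total cost."""
    total_cost = implementation_cost + maintenance_cost
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (manual_cost_avoided - total_cost) / total_cost


# Illustrative yearly figures, all in the same currency.
roi = automation_roi(
    manual_cost_avoided=60_000,
    implementation_cost=25_000,
    maintenance_cost=15_000,
)
print(f"ROI: {roi:.0%}")
```

With these numbers the result is 50%, meaning automation returned its cost plus half again. A negative value would indicate that maintenance and setup are outweighing the savings.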

Finally, establish shared standards for test components, documentation, and naming conventions to reduce redundancy and improve reusability. Maintain a living document for these standards, updating it as your team discovers more efficient methods. Regular retrospectives can help pinpoint what’s working and what needs adjustment, ensuring your regression testing evolves alongside your development practices. A data-driven approach like this strengthens the continuous integration and testing strategies already in place.

Selecting the right regression testing tool can make or break your DevOps workflow. Two of the most well-known options - Selenium and Katalon Studio - both hold a 4.5/5 rating on G2, but they cater to different needs and team setups. Below is a summary of their key differences, followed by a deeper dive into what sets them apart.

| Feature | Selenium | Katalon Studio |
|---|---|---|
| Pricing | Free (open-source) | Free version + Premium at $167/month for 1 user |
| Programming Languages | Java, C#, Python, Ruby | Groovy and Java |
| Test Types | Web applications | Web, desktop, mobile, and API |
| Coding Requirements | Requires coding skills | No-code, low-code, and full-code modes |
| Setup Complexity | Manual WebDriver and browser driver setup | Single installation package |
| Integrations | TestNG, JUnit, Maven, third-party libraries | Built-in Selenium, Appium, CI/CD pipelines |
| Support | Community forums and resources | Free and paid support plans with ticket system |

Selenium is a go-to tool for teams with strong programming expertise. As an open-source framework, it supports multiple programming languages like Java, C#, Python, and Ruby. This flexibility allows for deep customization, making it ideal for complex testing scenarios. Selenium also integrates smoothly with tools like TestNG, JUnit, and Maven, enabling continuous integration and delivery workflows.

However, Selenium comes with its challenges. Setting it up requires manual configuration of WebDriver and browser drivers, which can be time-consuming. While it's free to use, hidden costs can arise from the need for infrastructure setup, script development, and ongoing maintenance.

Katalon Studio, on the other hand, offers a simpler, more accessible platform. It supports testing across web, mobile, API, and desktop applications, making it a versatile choice for teams handling diverse testing needs. With no-code, low-code, and full-code modes, Katalon caters to teams with varying technical skills. Its single installation package ensures a quick setup process.

While Katalon Studio offers a free version, its premium features start at $167 per month for a single user, with an Enterprise edition available from $69 per user per month. This pricing model can save time and effort on development and maintenance, especially for teams without extensive coding expertise.

Selenium is best suited for teams with strong coding skills that need extensive customization and flexibility. Its ability to integrate with third-party tools and frameworks makes it ideal for complex projects.

Katalon Studio, with its no-code options and rapid setup, is perfect for teams of mixed skill levels working across multiple platforms. Its recognition on Gartner Peer Insights highlights its readiness for enterprise-level projects.

Both tools integrate seamlessly with CI/CD pipelines, making them reliable choices for automated regression testing. Ultimately, your decision should align with your team's expertise, budget, and testing requirements. Whether you prioritize customization (Selenium) or ease of use and comprehensive features (Katalon Studio), there's a solution that fits your needs.

Delivering high-quality software in a fast-paced DevOps environment hinges on a thoughtful mix of automation and human involvement in regression testing. The strategies shared earlier outline a clear path for teams aiming to improve software quality while keeping up with rapid release cycles.

Set clear goals and focus on critical features. Defining precise testing objectives early on pays off. For instance, NCR achieved quicker defect detection by leveraging automated regression testing across their customer journey. Prioritize automating repetitive, stable tests while reserving manual efforts for areas like user interface updates, which often require a human touch.

The impact of these methods is clear. NetForum Cloud at Community Brands boosted their automated test cases by 40% in just a few months, nearly eliminating customer downtime during feature updates. Similarly, Wurl slashed their regression testing time to less than a day, enabling faster product iterations.

Keep test suites comprehensive and adaptable. Automation is most effective when test suites are continuously updated. Removing redundant tests reduces false positives, while adding coverage for new features ensures relevance. Techniques like parallel testing can further speed up execution, and close collaboration between development and testing teams ensures alignment.
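
To make the parallel testing idea concrete, here is a minimal sketch using only Python's standard library. The two test classes and the helper names (`run_case`, `run_in_parallel`) are hypothetical stand-ins, not part of any tool discussed above. The sketch assumes the test classes are independent of each other, which is the precondition for running them concurrently.

```python
import unittest
from concurrent.futures import ThreadPoolExecutor


class LoginRegression(unittest.TestCase):
    """Hypothetical regression check for a login feature."""

    def test_email_domain_is_preserved(self):
        self.assertTrue("user@example.com".endswith("@example.com"))


class CheckoutRegression(unittest.TestCase):
    """Hypothetical regression check for a checkout feature."""

    def test_order_total_in_cents(self):
        self.assertEqual(2 * 1999, 3998)


def run_case(case_class):
    """Run one TestCase class with its own result object; return (name, passed)."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(case_class)
    result = unittest.TestResult()
    suite.run(result)
    return case_class.__name__, result.wasSuccessful()


def run_in_parallel(case_classes, workers=4):
    """Run independent test classes concurrently; return {class name: passed}."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(run_case, case_classes))


if __name__ == "__main__":
    print(run_in_parallel([LoginRegression, CheckoutRegression]))
```

Threads suit tests that mostly wait on I/O, such as browser or API calls. Tests that share mutable state or a single database fixture would need isolation first, and CPU-heavy suites are usually better split across processes or CI agents.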

Commit to ongoing refinement. Use testing results to fine-tune your process. Regularly reviewing outcomes and making adjustments strengthens your workflow. By embedding these practices, you reinforce the principles discussed throughout this article.

To achieve stable, high-quality software at speed, integrate regression tests into your CI/CD pipeline for immediate feedback, update tests with every change, and promote a strong quality-first mindset. At Propelius Technologies (https://propelius.tech), these approaches are at the heart of every project, ensuring reliable and efficient software delivery.

Integrating regression testing into CI/CD pipelines simplifies software delivery by automating tests, cutting down on manual work, and delivering quicker feedback on code changes. This helps ensure that new updates don’t break existing functionality, keeping the application stable even during fast-paced deployment cycles.

By validating code continuously, teams can identify problems early, roll out updates more quickly, and lower the chances of errors making it to production. This process boosts both the efficiency and reliability of software delivery in DevOps workflows, helping teams maintain high-quality standards without sacrificing speed.

Using test automation tools for regression testing offers several key benefits that enhance both precision and productivity. These tools significantly speed up the testing process, running tests much faster than manual methods. This not only shortens the overall testing cycle but also helps you roll out updates to the market faster. Plus, automation removes the risk of human error during repetitive tasks, ensuring consistent and reliable results.

Another advantage is the ability to handle a wider range of scenarios, leading to more thorough test coverage. This means bugs can be identified earlier in the development process, ultimately saving time and reducing costs. By simplifying workflows and increasing dependability, automation tools help your DevOps team work more efficiently while delivering better returns on investment.

When conducting regression testing, zeroing in on high-risk areas is a smart move. This ensures the most critical and vulnerable parts of your software are carefully examined. By doing so, you can catch potential issues early, minimizing the risk of major disruptions or costly problems after updates or changes.

Focusing on these areas helps maintain system stability, safeguard essential functionalities, and provide a smoother user experience. It’s also a practical way to use resources efficiently while preserving the performance and reliability of your application.
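
One simple way to decide which areas count as high-risk is to score each one by how likely it is to break and how costly a failure would be. The sketch below assumes a 1-to-5 scale for both factors and multiplies them. The scale, the `FeatureArea` type, and the sample areas are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureArea:
    name: str
    failure_likelihood: int  # 1 (rarely breaks) to 5 (breaks often)
    business_impact: int     # 1 (cosmetic) to 5 (revenue or data loss)

    @property
    def risk_score(self) -> int:
        """Likelihood multiplied by impact; higher means test it first."""
        return self.failure_likelihood * self.business_impact


def prioritize(areas, budget):
    """Return the `budget` highest-risk areas, highest score first."""
    ranked = sorted(areas, key=lambda area: area.risk_score, reverse=True)
    return ranked[:budget]


# Hypothetical sample data for illustration only.
areas = [
    FeatureArea("checkout", failure_likelihood=4, business_impact=5),
    FeatureArea("profile page", failure_likelihood=2, business_impact=2),
    FeatureArea("login", failure_likelihood=3, business_impact=5),
    FeatureArea("help center", failure_likelihood=1, business_impact=1),
]
```

With these sample scores, `prioritize(areas, 2)` selects checkout (score 20) and login (score 15), so a time-boxed regression run would cover those two areas before anything else.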
