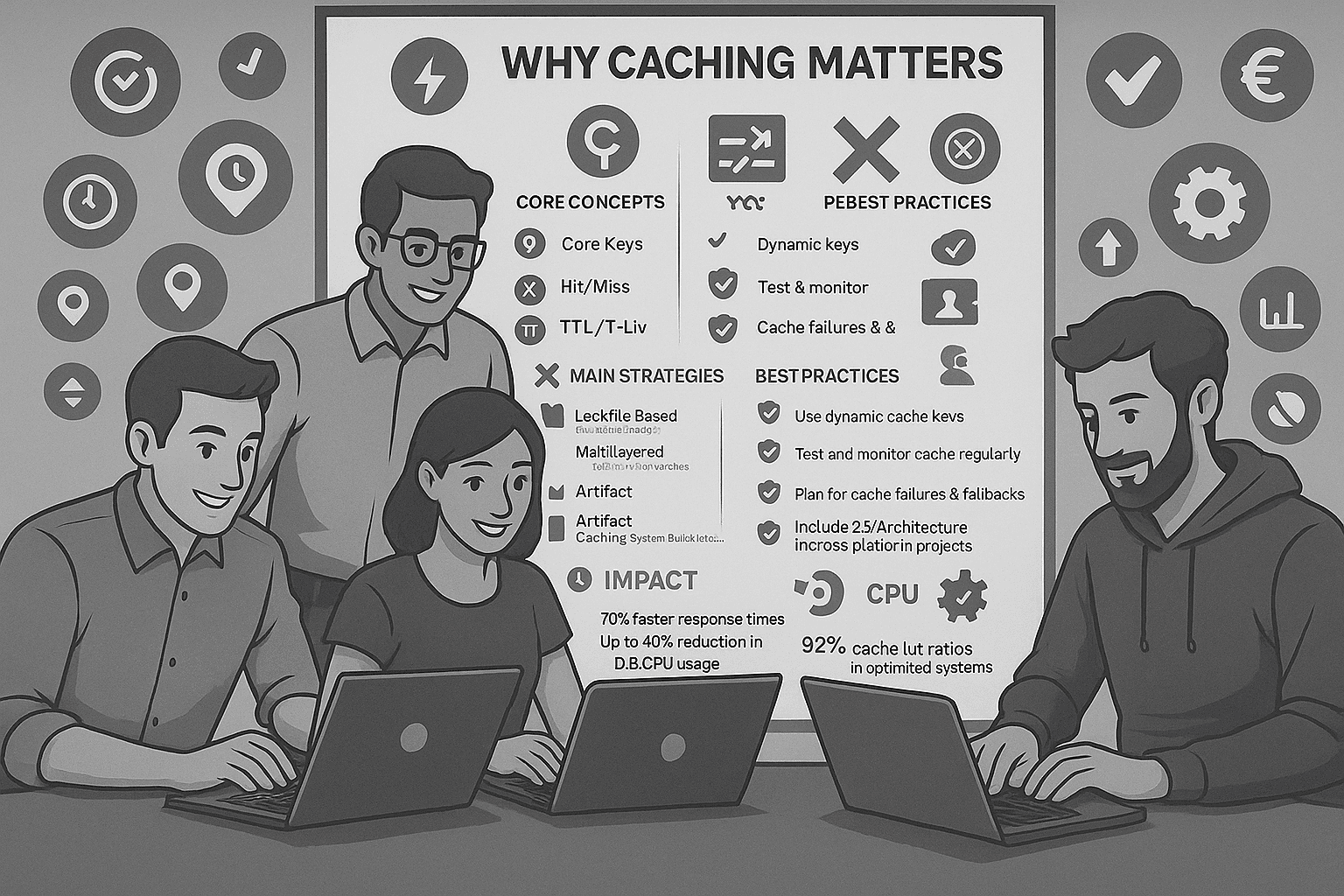

When managing dependencies in software development, caching can save time, reduce costs, and improve build performance. Here's what you need to know:

Quick Tip: Start simple with caching, then scale based on your project's needs. A well-implemented caching strategy can drastically improve productivity and resource efficiency.

Before diving into specific strategies, it's important to grasp the basics of caching in dependency management. At its core, caching involves storing frequently used dependencies to avoid repeatedly downloading them from the network during build processes. This simple yet effective approach saves time and resources, especially since without caching, every workflow run starts from scratch.

To effectively implement and troubleshoot caching strategies, there are a few fundamental concepts you need to understand. These concepts form the backbone of any efficient caching system.

A cache key that combines a runtime version with a lockfile hash, for example, might look like node-v16-package-lock-hash-abc123.

With these concepts in mind, let’s explore scenarios where caching proves valuable.

Caching is most effective in situations where repetitive dependency fetching slows things down. For example, it's ideal for tasks like development builds, dependency installation, and Docker image builds. By storing and reusing dependencies, you can significantly speed up these processes.

In large-scale projects, caching can make a huge difference. Reads from an in-memory cache typically complete in under a millisecond, considerably faster than reads from disk storage. This speed advantage becomes critical when managing hundreds or even thousands of dependencies in complex applications.

CI/CD pipelines are another perfect use case for caching. A single caching instance can handle hundreds of thousands of input/output operations per second (IOPS), which can dramatically improve build performance. This is especially beneficial for teams running multiple builds daily.

Beyond improving speed, caching also reduces infrastructure costs by cutting down on network requests and server load. However, it’s not always the right choice. Avoid caching in scenarios like production releases, security-critical pipelines, or infrastructure deployments where accuracy and reliability are paramount. In such cases, fetching fresh dependencies is a safer approach.

For development teams, a smart strategy is to cache specific versions of dependencies rather than the latest ones. This ensures consistency across builds while still reaping the performance benefits of caching.

Lastly, caching isn't just about individual projects. It can also help applications scale by reducing the strain on backend systems, allowing them to handle more users simultaneously. This becomes increasingly important as teams grow and build frequency rises.

When it comes to dependency caching, there are several strategies designed to make your development workflows faster and more efficient. Each approach serves different needs, depending on the complexity of your project and the tools you’re using.

Lockfiles, such as package-lock.json for npm or yarn.lock for Yarn, are incredibly useful for creating stable cache keys. These files store exact version details and the dependency tree, ensuring developers always install the same versions of dependencies.

For instance, GitHub Actions enables you to create cache keys that combine the operating system and a hash of the dependency file. A typical key might look like this: node-deps-ubuntu-latest-${{ hashFiles('**/package-lock.json') }}. This setup ensures the cache is updated only when dependencies actually change.
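As a rough illustration of the idea behind such a key, the sketch below derives one from a prefix, an OS label, and a SHA-256 digest of the lockfile contents. The function name `lockfile_cache_key` and its parameters are hypothetical and are not part of any CI platform's API.

```python
import hashlib


def lockfile_cache_key(prefix: str, os_name: str, lockfile_bytes: bytes) -> str:
    """Build a cache key from a prefix, an OS label, and a hash of the lockfile."""
    # Any change to the lockfile contents produces a different digest,
    # and therefore a different key.
    digest = hashlib.sha256(lockfile_bytes).hexdigest()
    return f"{prefix}-{os_name}-{digest}"
```

Calling it twice with identical lockfile bytes yields the same key, so the cached dependencies are reused until the lockfile itself changes.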

To make things easier, GitHub Actions also offers setup actions for various package managers, including npm, Yarn, pnpm, pip, Poetry, Gradle, Maven, RubyGems, Go, and .NET NuGet. These actions handle cache key generation and dependency restoration automatically, requiring minimal configuration.

The strength of lockfile-based caching lies in its accuracy. Unlike caching based on package.json, which might miss subtle version changes, this method captures the complete dependency state. This reduces the chances of build failures or unexpected issues caused by mismatched dependencies.

Now, let’s look at how caching can be tailored for projects that use multiple languages or ecosystems.

For projects that rely on multiple languages or frameworks, isolating caches by language can help avoid unnecessary invalidations. Full-stack applications, for example, often use multiple package managers. By separating caches for each dependency manager, you can optimize the caching process.

Imagine a full-stack app where npm handles frontend dependencies, pip manages Python backend services, and Maven takes care of Java microservices. Instead of creating a single cache for all dependencies, you can set up individual caches for each stack. This approach has several benefits:

Platforms like GitLab CI/CD allow you to define caches per job using the cache keyword and even support fallback cache keys. For example, a frontend job might cache node_modules, while a backend job stores Python packages separately. Similarly, Azure Pipelines offers a dedicated Cache task, with examples for tools like Bundler, Ccache, Docker, Go, Gradle, Maven, .NET/NuGet, npm, Yarn, and more. Fallback cache keys can retrieve partial caches when an exact match isn’t available, improving efficiency.
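To make the per-ecosystem idea concrete, here is a minimal sketch that computes one key per dependency manager from that manager's own lockfile. The function `ecosystem_cache_keys` and the ecosystem labels are assumptions chosen for illustration.

```python
import hashlib


def ecosystem_cache_keys(lockfiles: dict[str, bytes]) -> dict[str, str]:
    """Return one cache key per ecosystem, derived only from that ecosystem's lockfile."""
    return {
        name: f"{name}-{hashlib.sha256(content).hexdigest()[:16]}"
        for name, content in lockfiles.items()
    }
```

Because each key depends on a single lockfile, updating a frontend package changes only the npm key, and the pip and Maven caches stay valid.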

Dependency caching is essential, but you can take it further by caching build artifacts. This is particularly valuable for complex applications with lengthy compilation processes.

For example, in a React application, you might cache not only the node_modules directory but also compiled JavaScript bundles, optimized images, and generated CSS files. This saves time during subsequent builds, especially when only small parts of the codebase change.

Docker caching is another powerful option. Each instruction in a Dockerfile creates a layer, and Docker can reuse unchanged layers from previous builds. By structuring your Dockerfile to copy dependency files before the application code, you can ensure that dependency installation layers remain cached even when the code changes.
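The effect of instruction order can be shown with a simplified model, which is not Docker's actual implementation: each layer's identity is treated as a hash of its parent layer, its instruction, and the files it copies. The function `layer_ids` and the step tuples are hypothetical.

```python
import hashlib


def layer_ids(steps: list[tuple[str, bytes]]) -> list[str]:
    """Compute a chained id per step; each step is (instruction, copied file contents)."""
    ids = []
    parent = ""
    for instruction, inputs in steps:
        h = hashlib.sha256()
        # A layer can be reused only if its parent, instruction, and inputs all match.
        h.update(parent.encode())
        h.update(b"\0")
        h.update(instruction.encode())
        h.update(b"\0")
        h.update(inputs)
        parent = h.hexdigest()
        ids.append(parent)
    return ids
```

In this model, a build that copies the lockfile and installs dependencies before copying application code keeps the same ids for the install step when only the code changes. Copying everything first changes every later id.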

To keep things efficient, use file checksums or hashes for smart cache invalidation. This ensures that only the affected components are rebuilt, while the rest of the cached artifacts remain intact.
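A minimal sketch of checksum-based invalidation, assuming each component's sources are available as bytes: digests from the previous build are compared with the current ones, and only components whose digest changed are reported. The helper names `component_digests` and `stale_components` are illustrative.

```python
import hashlib


def component_digests(sources: dict[str, bytes]) -> dict[str, str]:
    """Hash each component's source content."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in sources.items()}


def stale_components(previous: dict[str, str], current: dict[str, str]) -> list[str]:
    """Return components that are new or whose digest differs from the previous build."""
    return sorted(name for name, digest in current.items() if previous.get(name) != digest)
```

A build script could store the digests alongside the cached artifacts and rebuild only the names returned by `stale_components`.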

Shared volumes in CI/CD pipelines allow for persistent storage across builds, reducing the need to re-download dependencies from scratch every time. This is especially useful for teams with frequent builds.

Typically, CI/CD runners start with a clean environment for every build, which can slow things down. Shared volumes break this cycle by providing a persistent storage space that survives between builds. One build can populate the cache, and subsequent builds can reuse it.

However, implementing shared volumes requires careful planning. Cache isolation is crucial - different projects or branches should have separate namespaces to avoid conflicts. Additionally, proper cleanup policies are necessary to manage storage costs. Distributed caching, which shares build artifacts across multiple machines, can be particularly effective when combined with intelligent cache key strategies to maintain consistency across diverse build configurations.
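The namespace and cleanup points above might be sketched as follows. The directory layout, the sanitizing rule, and the function names are assumptions for illustration, not a prescribed scheme.

```python
import re
from pathlib import PurePosixPath


def cache_namespace(root: str, project: str, branch: str) -> PurePosixPath:
    """Give each project/branch pair its own directory on the shared volume."""
    def safe(part: str) -> str:
        # Replace anything that could act as a path separator or traversal.
        return re.sub(r"[^A-Za-z0-9_-]", "_", part)

    return PurePosixPath(root) / safe(project) / safe(branch)


def expired(last_used: dict[str, float], now: float, max_age_seconds: float) -> list[str]:
    """Return cache entries not used within the retention window."""
    return sorted(name for name, ts in last_used.items() if now - ts > max_age_seconds)
```

A periodic cleanup job could delete the entries returned by `expired` to keep storage costs bounded.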

Building on our earlier discussion of caching strategies, this section dives into best practices and common errors that can arise when implementing these techniques. While effective caching can significantly improve development workflows, its success depends on careful planning and execution.

Cache keys are at the heart of any reliable caching system. Including dynamic values, like dependency file checksums, in your cache key ensures you're always retrieving the correct cached data.

For instance, in a CircleCI workflow for a Node.js project, you might use:

v1-deps-{{ checksum "package-lock.json" }}

Here, the checksum of the package-lock.json file is part of the cache key, so any changes to the file automatically generate a new cache entry.

Similarly, in a GitHub Actions workflow, you could use:

${{ runner.os }}-build-${{ env.cache-name }}-${{ hashFiles('**/package-lock.json') }}

This key combines the runner's operating system, a cache name read from an environment variable, and a hash of the lock file, which makes it highly specific.

Fallback keys are also important. If the primary key doesn't match, a fallback key like v1-deps- matches by prefix and can restore the most recent cache whose key starts with it. This way, only new or changed dependencies need to be installed, avoiding a complete rebuild.
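The lookup order described here can be sketched as: try the exact key first, then fall back to the newest entry that shares a prefix. This is a simplified model with a hypothetical `find_cache` function, not the implementation used by any particular CI service.

```python
from typing import Optional


def find_cache(entries: dict[str, float], key: str, restore_keys: list[str]) -> Optional[str]:
    """Pick a cache entry; `entries` maps cache key to creation timestamp."""
    if key in entries:
        return key  # exact hit
    for prefix in restore_keys:
        matches = [k for k in entries if k.startswith(prefix)]
        if matches:
            # Partial hit: the most recently created cache with this prefix.
            return max(matches, key=lambda k: entries[k])
    return None  # full miss, install from scratch
```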

To streamline cache key generation:

Once you've nailed down effective cache keys, the next big challenge is invalidation - ensuring outdated data is removed promptly. There are several strategies to handle this:

One is to invalidate the cache whenever dependency files, such as package.json or lock files, are updated.

For complex systems, a hybrid approach combining these methods often works best. Pair this with continuous monitoring of cache efficiency and thorough integration testing to ensure everything runs smoothly.

Caching across platforms introduces unique challenges that, if overlooked, can lead to broken builds. For instance:

Permissions can also be tricky. Cache files created on Linux might not work seamlessly on other systems due to incompatible permissions. Setting proper permissions during cache creation and using containerized environments can help maintain consistency.

Additionally, environment-specific dependencies, such as native modules compiled for one architecture, may not work on another. Including architecture and OS identifiers in your cache keys can address this.
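One way to fold the OS and architecture into a key is sketched below using Python's standard `platform` module. The key layout and the `platform_cache_key` name are assumptions.

```python
import platform
from typing import Optional


def platform_cache_key(
    prefix: str,
    lock_digest: str,
    os_name: Optional[str] = None,
    arch: Optional[str] = None,
) -> str:
    """Include OS and CPU architecture so native builds are never shared across them."""
    os_part = (os_name or platform.system()).lower()
    arch_part = (arch or platform.machine()).lower()
    return f"{prefix}-{os_part}-{arch_part}-{lock_digest}"
```

With this layout, a cache populated on an x86_64 Linux runner is not restored on an arm64 runner, even when the lockfile digest is identical.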

To minimize risks associated with caching, consider the following:

Hot keys - frequently accessed cache entries - can overload individual cache nodes and create bottlenecks. Distribute the load using consistent hashing and avoid relying on a single cache instance.
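For readers unfamiliar with consistent hashing, here is a compact sketch of a hash ring with virtual nodes. The class name `HashRing`, the node labels, and the replica count are illustrative. Note that a ring spreads different keys across nodes, but a single very hot key still maps to one node.

```python
import bisect
import hashlib


class HashRing:
    """Map keys to cache nodes so that adding a node moves only a fraction of keys."""

    def __init__(self, nodes: list[str], replicas: int = 100) -> None:
        if not nodes:
            raise ValueError("at least one node is required")
        # Each node is placed on the ring at many points to even out the load.
        self._ring = sorted(
            (self._hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def node_for(self, key: str) -> str:
        # The first ring point clockwise from the key's hash owns the key.
        index = bisect.bisect_right(self._points, self._hash(key)) % len(self._points)
        return self._ring[index][1]
```

When a fourth node joins a three-node ring, each key either stays on its original node or moves to the new one. No key is shuffled between the existing nodes.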

Structured caching can improve load times by up to 30%. Aim for cache hit ratios above 80%, and routinely review performance metrics to identify areas for improvement.

Lastly, plan for graceful degradation. If the cache fails, ensure your build process can still function, even if it’s slower. These strategies can help you implement a caching approach that balances efficiency and reliability, tailored to your project's unique needs.
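Graceful degradation can be as simple as treating any cache failure as a miss. In the sketch below, `restore_cache` and `install_fresh` are hypothetical callables supplied by the build script.

```python
from typing import Callable


def install_dependencies(
    restore_cache: Callable[[], bool],
    install_fresh: Callable[[], None],
) -> str:
    """Use the cache when it works; otherwise fall back to a fresh install."""
    try:
        if restore_cache():
            return "cache"
    except Exception as exc:
        # A broken cache backend should slow the build down, not fail it.
        print(f"cache unavailable, falling back to fresh install: {exc}")
    install_fresh()
    return "fresh"
```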

This section dives into various caching strategies, examining their strengths and weaknesses. Each approach has its own benefits and challenges, which can significantly influence your development process.

To choose the right caching method for your project, it's crucial to understand how these strategies compare:

| Strategy | Build Speed | Reliability | Maintainability | Best Use Case |
|---|---|---|---|---|
| Lockfile-Based Caching | High – avoids unnecessary downloads | High – ensures consistent dependency versions | Medium – requires lockfile updates | Projects with stable dependencies and a focus on version consistency |
| Multi-Layered Caching | Very High – optimizes at multiple levels | Medium – complex invalidation coordination | Low – managing multiple cache layers adds complexity | Large applications requiring caching across client, server, and database layers |
| Shared Volume Caching | High – reuses data across build agents | High – single source of truth for cached data | High – centralized management | CI/CD pipelines with multiple runners or distributed build environments |
| Artifact Caching | Very High – stores pre-built components | Medium – requires proper versioning | Medium – managing artifact storage and updates | Projects with expensive build processes or compiled assets |

While the table offers a quick comparison, let's explore each strategy in more detail to better understand their operational trade-offs.

Caching doesn’t just save time - it can also reduce application response times by up to 70% during high traffic periods and lower database CPU usage from ~80–90% to ~40–50%. Some implementations achieve cache hit ratios of ~92%, meaning most requests are served directly from memory.

Selecting the best caching strategy depends on your project's performance needs, team size, and complexity. As caching expert Ilia Ivankin advises:

"Always start by evaluating your metrics and SLAs. If caching can be avoided initially, hold off on implementing it. If a 500ms delay in your service isn't a concern, then it's not a problem. Remember that solving data synchronization issues can add complexity and maintenance overhead to your system."

Here are some factors to guide your decision:

Budget also plays a role. Simpler strategies are less resource-intensive, while distributed solutions may require additional hardware or cloud services.

Ivankin suggests taking a step-by-step approach:

"If you decide to add caching, add it incrementally and monitor where it's lacking instead of immediately creating a complex solution. In practice, a simple Caffeine cache with low TTL is often sufficient for non-complex fintech or similar applications."

For projects where speed is critical from the start, consider tools like Hazelcast or Redis.

Finally, monitor and measure your chosen approach. Some organizations have cut latency by up to 40% through active monitoring. For example, an e-commerce platform reported a 20% increase in sales after optimizing its data retrieval process.

The goal is to balance performance with reliability. While caching can significantly speed up workflows and reduce costs, prioritizing reliability helps avoid issues like corrupted builds or outdated dependencies. By reusing data from previous jobs, you can make your builds faster and reduce fetch operation costs. Choose a strategy that matches your team's capabilities and project needs, then refine it based on real-world results.

Crafting an effective caching strategy for dependency management is all about customization. Your approach should reflect your team's size, infrastructure setup, performance goals, and budget constraints.

To get the most out of caching, consider adopting a multi-layered strategy that fits your app's architecture and data usage. Focus on setting the right TTL (time-to-live) values, choosing suitable eviction policies like LRU (Least Recently Used) or LFU (Least Frequently Used), and implementing accurate invalidation processes.
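To show how TTL and LRU eviction interact, here is a small in-memory sketch. The class `TTLLRUCache` and the injectable `clock` parameter are assumptions made for illustration and testing. A production system would more likely rely on an existing cache library.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Optional


class TTLLRUCache:
    """Bounded cache: entries expire after a TTL, and the least recently used is evicted."""

    def __init__(self, max_size: int, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._max_size = max_size
        self._ttl = ttl_seconds
        self._clock = clock
        self._data: "OrderedDict[str, tuple[float, Any]]" = OrderedDict()

    def get(self, key: str) -> Optional[Any]:
        item = self._data.get(key)
        if item is None:
            return None
        expires_at, value = item
        if self._clock() >= expires_at:
            del self._data[key]  # expired: treat as a miss
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key: str, value: Any) -> None:
        self._data[key] = (self._clock() + self._ttl, value)
        self._data.move_to_end(key)
        if len(self._data) > self._max_size:
            self._data.popitem(last=False)  # evict the least recently used entry
```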

Keep an eye on metrics like cache hit and miss ratios, as well as latency, to refine your setup. When fine-tuning, account for factors such as how often your data changes, how frequently it's accessed, and the balance between read and write operations.
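Tracking the hit ratio needs little more than two counters. The `CacheStats` class below is a hypothetical helper, not part of any monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class CacheStats:
    """Count cache hits and misses and derive the hit ratio."""

    hits: int = 0
    misses: int = 0

    def record(self, hit: bool) -> None:
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        # Report 0.0 before any lookups instead of dividing by zero.
        return self.hits / total if total else 0.0
```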

The best way to start? Keep it simple. Begin with a straightforward setup and scale up only when measurable performance improvements make the added complexity worthwhile. This step-by-step approach ensures you avoid unnecessary complications while allowing your caching strategy to grow with your project.

Propelius Technologies offers the expertise you need to turn these caching strategies into practical solutions. With a proven track record of delivering over 100 projects using technologies like React.js and Node.js, we’re well-equipped to optimize your dependency management from the ground up.

Whether you're looking for a ready-to-go solution with built-in caching or need expert developers to enhance your current setup, we tailor our services to meet your infrastructure and performance requirements.

Our 90-day MVP sprint is designed with your success in mind. By sharing the risk - offering up to a 50% discount if deadlines aren’t met - we ensure your application launches on time with a caching system that’s ready to scale as your user base grows.

With over a decade of experience in the San Francisco Bay Area startup scene, our CEO understands the importance of performance optimization for early-stage businesses. We don’t just implement caching solutions; we work closely with you to develop strategies that align with your goals, helping you avoid costly missteps while laying the groundwork for sustainable growth.

Interested in streamlining your dependency management and cutting infrastructure costs? Let’s talk about how we can help you build a faster, more efficient development workflow.

The right caching strategy for your project hinges on factors like how your data is accessed, your performance targets, and your application's overall design. Begin by identifying which data gets the most traffic and whether your workload leans toward being read-heavy, write-heavy, or a mix of both. This insight can guide you in choosing between options such as in-memory caching for lightning-fast access, distributed caching for handling larger scales, or even a hybrid approach that combines the best of both worlds.

One common approach is cache-aside (lazy caching), which offers simplicity and adaptability. Here, data is only added to the cache when it's requested, keeping things efficient. To fine-tune your setup, make sure to test and monitor various strategies. This ensures you can achieve a high cache hit ratio and tailor the system to meet your application's unique demands.
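Cache-aside reduces to a short lookup routine, sketched here with a plain dictionary standing in for the cache and a hypothetical `loader` callable standing in for the slower data source.

```python
from typing import Callable, Dict, TypeVar

K = TypeVar("K")
V = TypeVar("V")


def get_or_load(cache: Dict[K, V], key: K, loader: Callable[[K], V]) -> V:
    """Return the cached value, loading and storing it only on a miss."""
    if key in cache:
        return cache[key]
    value = loader(key)  # the slow path runs only when the key is absent
    cache[key] = value
    return value
```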

Caching in dependency management can sometimes lead to problems like cache poisoning, where harmful or corrupted dependencies are stored unintentionally, or cache staleness, which happens when outdated or insecure dependencies are used. Both scenarios can jeopardize your system's security and functionality.

Here’s how you can reduce these risks:

By following these steps, you can use caching to its full potential while keeping risks to a minimum in your dependency management workflow.
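One possible safeguard against poisoned cache entries is to verify a cached artifact against a digest recorded from a trusted source before using it. The sketch below assumes the expected SHA-256 digest is available as a hex string. The function name `verify_artifact` is illustrative.

```python
import hashlib
import hmac


def verify_artifact(content: bytes, expected_sha256: str) -> bool:
    """Check that cached content matches the digest recorded when it was trusted."""
    actual = hashlib.sha256(content).hexdigest()
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

An entry that fails the check can be discarded and fetched again from the registry.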

Caching plays a crucial role in speeding up CI/CD pipelines by cutting down build times. By saving and reusing dependencies, build artifacts, and intermediate results, pipelines can skip repetitive tasks, making workflows faster and more efficient.

To make the most of caching, focus on distributed caching to handle scalability, splitting caches to eliminate unnecessary dependencies, and parallelizing jobs to boost performance. Establishing clear cache policies is equally important to ensure data stays up-to-date and to avoid problems caused by stale information. Managing caches effectively helps maintain quick and reliable builds while sidestepping issues like outdated dependencies.
