Tenant Data Isolation: Patterns and Anti-Patterns

Explore effective patterns and pitfalls of tenant data isolation in multi-tenant systems to enhance security and compliance.

Jul 30, 2025


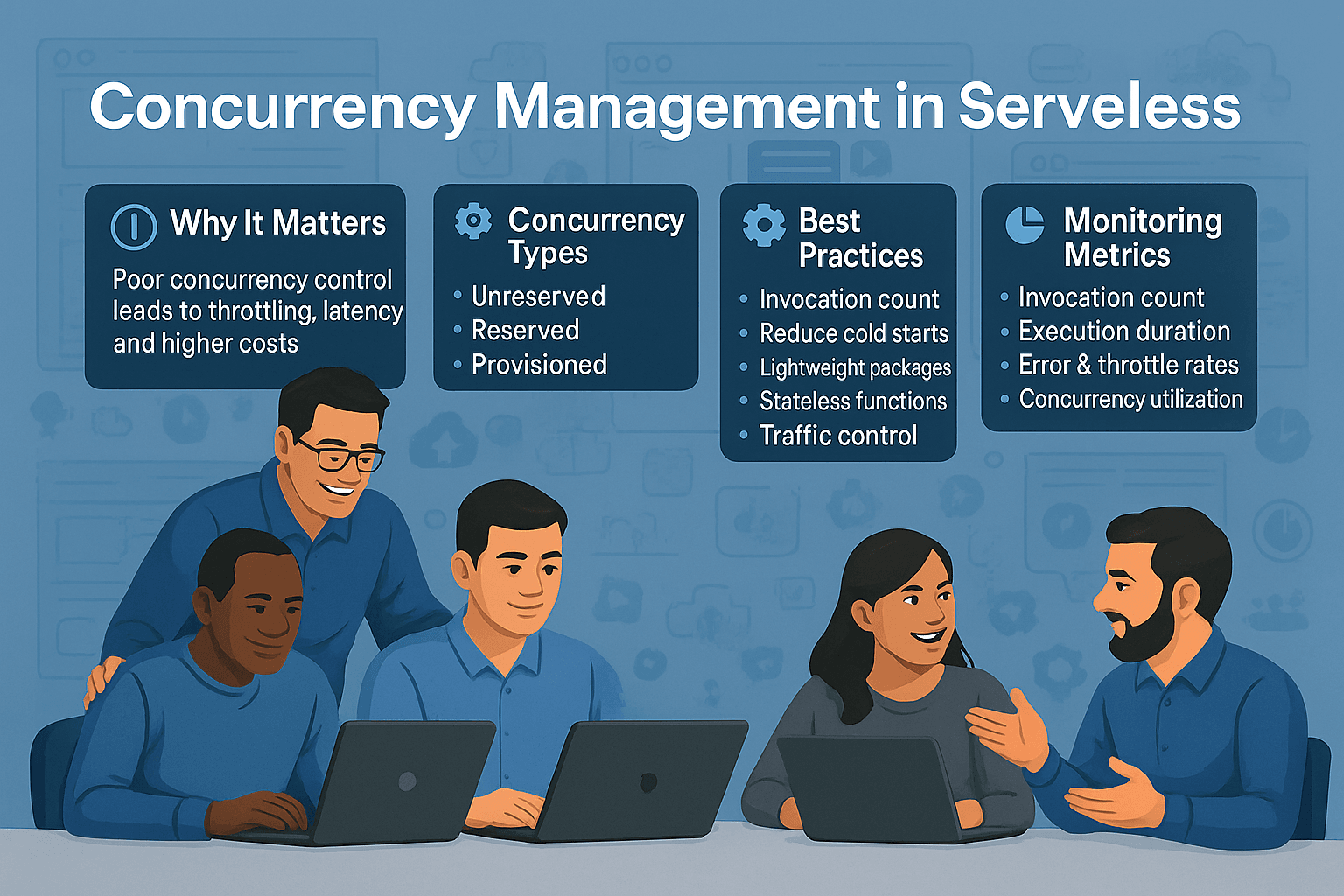

Serverless concurrency is how cloud platforms like AWS Lambda handle multiple function executions simultaneously - without you managing servers. But if concurrency isn’t managed well, you risk performance issues, higher costs, and throttled requests.

| Concurrency Type | Cost | Best For | Throttling Protection |
|---|---|---|---|
| Unreserved | No extra charge | Low-priority tasks | Shared risk |
| Reserved | No extra charge | Critical functions | Guaranteed capacity |
| Provisioned | Extra charge | High-traffic apps | Pre-warmed, no cold starts |

Next Steps: Start by reserving concurrency for important functions, monitor usage with CloudWatch, and optimize performance with caching and stateless design.

To avoid throttling and unexpected costs, it's crucial to configure your concurrency limits carefully. AWS Lambda provides three types of concurrency - each with distinct purposes. Understanding how and when to use these options can help you strike the right balance between performance and cost.

By default, all Lambda functions in an account share a pool of 1,000 concurrent executions per Region. Because the pool is shared, one function that consumes too much of it can leave others without capacity and cause them to be throttled.

Reserved concurrency sets aside a specific number of execution environments exclusively for a particular function. This guarantees capacity for critical functions and also caps how far they can scale. Configuring reserved concurrency doesn't incur any additional cost. Some reports claim that functions with reserved concurrency are up to 40% less likely to be throttled than those relying solely on the shared pool.

Provisioned concurrency, on the other hand, pre-initializes environments to eliminate cold starts. While this reduces latency, it does come with extra costs.
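
To make the two settings concrete, here is a minimal sketch assuming you configure Lambda from Python with boto3. The helpers only build keyword arguments for the Lambda calls `put_function_concurrency` and `put_provisioned_concurrency_config`, so they run without AWS credentials; the helper names, the `checkout-api` function, and the `live` alias are hypothetical. The reservation check reflects Lambda's rule that at least 100 units of account concurrency must stay unreserved.

```python
# Sketch: request parameters for Lambda's concurrency APIs.
# boto3 is deliberately not imported, so this runs without AWS credentials.

DEFAULT_ACCOUNT_LIMIT = 1_000  # default concurrency quota per Region
MIN_UNRESERVED = 100           # Lambda keeps at least this much unreserved


def reserved_concurrency_request(function_name: str, reserved: int,
                                 already_reserved: int = 0,
                                 account_limit: int = DEFAULT_ACCOUNT_LIMIT) -> dict:
    """Build kwargs for lambda_client.put_function_concurrency(**kwargs)."""
    # Capacity that other functions have already reserved is not available here.
    available = account_limit - MIN_UNRESERVED - already_reserved
    if not 0 <= reserved <= available:
        raise ValueError(f"can reserve at most {available}, got {reserved}")
    return {"FunctionName": function_name,
            "ReservedConcurrentExecutions": reserved}


def provisioned_concurrency_request(function_name: str, qualifier: str,
                                    count: int) -> dict:
    """Build kwargs for lambda_client.put_provisioned_concurrency_config(**kwargs).

    Provisioned concurrency targets a published version or alias (the
    qualifier); it cannot be configured on $LATEST.
    """
    if qualifier == "$LATEST":
        raise ValueError("use a published version or alias, not $LATEST")
    if count < 1:
        raise ValueError("provisioned concurrency must be at least 1")
    return {"FunctionName": function_name, "Qualifier": qualifier,
            "ProvisionedConcurrentExecutions": count}
```

With boto3 you would pass the result straight through, for example `boto3.client("lambda").put_function_concurrency(**reserved_concurrency_request("checkout-api", 200))`.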

| Concurrency Type | Cost | Best Use Case | Throttling Protection |
|---|---|---|---|
| Unreserved | No additional charge | Low-traffic, non-critical functions | Shared risk across all functions |
| Reserved | No additional charge | Critical functions requiring guaranteed capacity | Dedicated protection up to the reserved limit |
| Provisioned | Additional charges apply | High-traffic, low-latency functions | Pre-warmed environments ready instantly |

Your choice depends on your application’s needs. Use reserved concurrency to guarantee resources for essential tasks or to set a scaling cap. Opt for provisioned concurrency when eliminating cold starts is worth the added expense - especially for applications where speed is non-negotiable.

Once you’ve chosen a concurrency type, the next step is to fine-tune your settings using monitoring tools.

Accurate data is the backbone of effective concurrency management. Amazon CloudWatch provides key Lambda metrics such as ConcurrentExecutions, Throttles, and UnreservedConcurrentExecutions to help you determine the right concurrency levels. A simple formula can guide your calculations:

Concurrency = (average requests per second) × (average request duration in seconds).
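
This is Little's law applied to Lambda: for example, 100 requests per second that each take 0.5 seconds need about 50 concurrent executions. A minimal sketch of the calculation follows; the function name is hypothetical, and rounding up is a choice so the estimate never undershoots.

```python
import math


def estimate_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Estimate steady-state concurrency as rate x duration, rounded up.

    The small epsilon keeps floating-point noise (e.g. 50.00000000000001)
    from pushing an exact result up to the next integer.
    """
    if requests_per_second < 0 or avg_duration_s < 0:
        raise ValueError("rate and duration must be non-negative")
    return math.ceil(requests_per_second * avg_duration_s - 1e-9)
```

Averages hide bursts, so treat the result as a floor and compare it against the peak ConcurrentExecutions you actually observe.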

CloudWatch alarms act as an early warning system. For example, you can set alerts to notify you if throttling occurs or if ConcurrentExecutions approaches a function's reserved limit. Some organizations that automate concurrency monitoring report as much as a 70% drop in operational overhead.
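
As a sketch of those two alarms, assuming boto3's CloudWatch client, the helpers below build arguments for `put_metric_alarm`. Lambda publishes throttling under the metric name `Throttles` in the `AWS/Lambda` namespace; the function name, SNS topic ARN, evaluation periods, and 80% threshold are hypothetical choices.

```python
def throttle_alarm_request(function_name: str, topic_arn: str) -> dict:
    """kwargs for cloudwatch.put_metric_alarm: alarm on any throttle within a minute."""
    return {
        "AlarmName": f"{function_name}-throttles",
        "Namespace": "AWS/Lambda",
        "MetricName": "Throttles",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 60,
        "EvaluationPeriods": 1,
        "Threshold": 0.0,
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no data points means no throttles
        "AlarmActions": [topic_arn],
    }


def near_reserved_limit_alarm_request(function_name: str, reserved: int,
                                      topic_arn: str, fraction: float = 0.8) -> dict:
    """kwargs for an alarm when peak concurrency reaches `fraction` of the reservation."""
    return {
        "AlarmName": f"{function_name}-near-reserved-limit",
        "Namespace": "AWS/Lambda",
        "MetricName": "ConcurrentExecutions",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Maximum",
        "Period": 60,
        "EvaluationPeriods": 3,  # sustained for three minutes, to reduce noise
        "Threshold": reserved * fraction,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": [topic_arn],
    }
```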

When configuring provisioned concurrency, AWS suggests adding a 10% buffer above your typical peak usage. Application Auto Scaling can further simplify this process by adjusting provisioned concurrency based on real-time utilization metrics. For instance, AWS examples show scaling policies that maintain utilization at around 70%, increasing capacity when usage exceeds this level and reducing it when it falls below 63%.
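
The sketch below combines both ideas, assuming boto3's `application-autoscaling` client: it sizes provisioned concurrency with the 10% buffer and builds the `register_scalable_target` and `put_scaling_policy` arguments for a target-tracking policy on the predefined `LambdaProvisionedConcurrencyUtilization` metric at 0.7. The function name, alias, and helper names are hypothetical.

```python
def provisioned_with_buffer(peak_concurrency: int, buffer_percent: int = 10) -> int:
    """Peak plus a percentage buffer, rounded up with integer math (no float error)."""
    return peak_concurrency + (peak_concurrency * buffer_percent + 99) // 100


def target_tracking_requests(function_name: str, alias: str, min_capacity: int,
                             max_capacity: int,
                             target_utilization: float = 0.7) -> tuple[dict, dict]:
    """kwargs for register_scalable_target and put_scaling_policy on a Lambda alias."""
    resource_id = f"function:{function_name}:{alias}"
    dimension = "lambda:function:ProvisionedConcurrency"
    register = {
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }
    policy = {
        "PolicyName": f"{function_name}-{alias}-utilization",
        "ServiceNamespace": "lambda",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Keep provisioned environments about 70% busy on average.
            "TargetValue": target_utilization,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "LambdaProvisionedConcurrencyUtilization",
            },
        },
    }
    return register, policy
```

Register the scalable target before putting the policy, since the policy refers to it.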

Establishing a baseline under normal operating conditions is essential. This baseline helps you make informed decisions about reserved and provisioned concurrency settings, minimizing the risks of over-provisioning or under-provisioning. Once you’ve laid this groundwork, you’ll be better prepared to implement automated scaling solutions in your serverless architecture.

When it comes to creating a responsive serverless architecture, addressing cold start delays is just as crucial as managing concurrency effectively. A cold start occurs when Lambda must initialize a new execution environment before it can handle a request. Cold starts affect a small share of invocations (fewer than 0.25% by some measurements) but can add up to five seconds of latency. While rare, these delays can be a significant issue for user-facing applications where speed is critical.

"Cold starts in AWS Lambda occur when an AWS Lambda function is invoked after not being used for an extended period, or when AWS is scaling out function instances in response to increased load." – AJ Stuyvenberg, serverless hero

To tackle cold starts, strategies like reducing package size, using provisioned concurrency, and warming functions can make a noticeable difference.

One of the simplest ways to cut down cold start times is by minimizing your function's package size. The logic is straightforward: the larger your package, the longer it takes to download and initialize. For instance, including the full Node.js AWS SDK can add 20–60ms to startup times, while a 60MB artifact can increase cold start latency by 250–450ms. For most Node.js applications, keeping your source code under 1MB is entirely doable.

Here’s how you can streamline your package: exclude everything your function doesn't need at runtime, for example with .lambdaignore or .npmignore files. By keeping your package lean, you can shave critical milliseconds off your cold start times.
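
A quick way to find what is bloating a package is to measure the unzipped build directory before zipping it. This is a minimal sketch; the helper names are hypothetical, and the 1 MiB default budget is an assumption based on the guideline above.

```python
import os
from typing import Iterator, List, Tuple


def _iter_files(root: str) -> Iterator[Tuple[int, str]]:
    """Yield (size_in_bytes, path_relative_to_root) for every file under root."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            yield os.path.getsize(path), os.path.relpath(path, root)


def package_size(root: str) -> int:
    """Total size of the unzipped package directory in bytes."""
    return sum(size for size, _ in _iter_files(root))


def largest_files(root: str, top: int = 5) -> List[Tuple[int, str]]:
    """The `top` biggest files, largest first: likely candidates for an ignore file."""
    return sorted(_iter_files(root), reverse=True)[:top]


def within_budget(root: str, budget_bytes: int = 1024 * 1024) -> bool:
    """True if the package fits the size budget (1 MiB here, an assumption)."""
    return package_size(root) <= budget_bytes
```

Running `largest_files("dist")` in CI, and failing the build when `within_budget` returns False, keeps size regressions from slipping into production unnoticed.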

Provisioned concurrency is another powerful tool for reducing cold start delays, particularly during predictable traffic spikes. This feature pre-initializes a set number of execution environments, ensuring they’re ready to handle requests with minimal latency - typically in the low double-digit milliseconds. It’s ideal for workloads like web apps, mobile backends, or real-time data processing.

Applications with well-defined traffic patterns, such as e-commerce platforms during sales or news sites during breaking events, can benefit significantly. With Application Auto Scaling, you can adjust provisioned concurrency automatically based on real-time utilization or on a schedule that matches your traffic patterns. For critical functions, analyzing invocation metrics helps you estimate how many instances you need to maintain smooth performance during peak times.
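
For the scheduled case, Application Auto Scaling's `put_scheduled_action` can raise provisioned concurrency before a known event and lower it afterwards. The sketch only builds the arguments; the function name, alias, action names, capacities, and dates are hypothetical, the schedule uses the six-field `cron(minutes hours day-of-month month day-of-week year)` format (UTC unless a time zone is set), and the alias must already be registered as a scalable target.

```python
def scheduled_action_request(function_name: str, alias: str, action_name: str,
                             cron_fields: str, min_capacity: int,
                             max_capacity: int) -> dict:
    """kwargs for application-autoscaling put_scheduled_action on a Lambda alias."""
    if min_capacity > max_capacity:
        raise ValueError("min_capacity cannot exceed max_capacity")
    return {
        "ServiceNamespace": "lambda",
        "ScheduledActionName": action_name,
        "ResourceId": f"function:{function_name}:{alias}",
        "ScalableDimension": "lambda:function:ProvisionedConcurrency",
        "Schedule": f"cron({cron_fields})",
        "ScalableTargetAction": {"MinCapacity": min_capacity,
                                 "MaxCapacity": max_capacity},
    }


# Hypothetical sale: warm up at 17:30 UTC on Nov 28, scale back down at 23:00.
scale_up = scheduled_action_request("checkout-api", "live", "sale-scale-up",
                                    "30 17 28 11 ? 2025", 500, 1000)
scale_down = scheduled_action_request("checkout-api", "live", "sale-scale-down",
                                      "0 23 28 11 ? 2025", 10, 100)
```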

Another way to combat cold starts is by warming your functions. This involves periodically invoking functions to keep their containers initialized and ready to handle incoming requests. Warming is particularly useful for workflows where even brief delays - like those in payment processing, user authentication, or real-time notifications - are unacceptable.

You can schedule these keep-alive invocations every 5–15 minutes using Amazon EventBridge (formerly CloudWatch Events). Your choice of runtime also affects how long cold starts take: Python, for example, starts much faster than some other languages, which makes it a great option for cold start-sensitive functions. Scripting languages like Python and Ruby tend to start faster than compiled languages like Java or C#.
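
A common warming pattern is to have the handler recognize the scheduled ping and return immediately. The sketch assumes an EventBridge scheduled rule invokes the function directly, so the event carries `"source": "aws.events"` and `"detail-type": "Scheduled Event"`; the response shapes are illustrative. One ping keeps only one execution environment warm, so this does not replace provisioned concurrency when many warm environments are needed.

```python
import json


def is_warmup_event(event) -> bool:
    """True for the EventBridge scheduled-rule payload used as a keep-alive ping."""
    return (isinstance(event, dict)
            and event.get("source") == "aws.events"
            and event.get("detail-type") == "Scheduled Event")


def handler(event, context=None):
    if is_warmup_event(event):
        # Skip real work: this invocation only keeps the environment initialized.
        return {"warmed": True}
    # Normal request path (placeholder for real business logic).
    return {"statusCode": 200, "body": json.dumps({"ok": True})}
```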

If you’re working with compiled languages, consider Ahead-Of-Time (AOT) compilation, which converts your code into optimized native binaries before deployment to speed up initialization. Additionally, increasing memory allocation can provide more CPU power during startup, significantly reducing latency. Testing different memory configurations can help you strike the right balance between performance and cost.

To handle massive concurrency in serverless environments, it's critical to design functions that are stateless and rely on external services for state management.

Stateless functions are the backbone of scalable serverless systems. By avoiding reliance on internal state, these functions can scale horizontally to handle thousands of concurrent executions without risking data conflicts or corruption. Each invocation operates independently, ensuring smooth and conflict-free processing.

In practice, stateless functions simplify scaling and enhance system reliability.

When functions follow stateless principles, external services take on the responsibility of managing state, paving the way for scalable and efficient serverless applications.
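
To illustrate, here is a minimal sketch of a stateless handler: everything it needs comes from the event and an external store, so any instance can serve any user. `CounterStore`, `InMemoryCounterStore`, and `make_handler` are hypothetical names. In production the store would wrap an atomic operation such as Redis `INCR` or a DynamoDB `UpdateItem` with an `ADD` expression, rather than a read-modify-write that concurrent invocations could interleave.

```python
from typing import Dict, Protocol


class CounterStore(Protocol):
    """External state, e.g. Redis INCR or a DynamoDB atomic counter."""

    def incr(self, key: str) -> int: ...


class InMemoryCounterStore:
    """Test double only: it lives in one process, so it is not shared in production."""

    def __init__(self) -> None:
        self._counts: Dict[str, int] = {}

    def incr(self, key: str) -> int:
        self._counts[key] = self._counts.get(key, 0) + 1
        return self._counts[key]


def make_handler(store: CounterStore):
    """Build a handler whose only state lives in `store`, not in the function."""

    def handler(event: dict, context=None) -> dict:
        visits = store.incr(f"visits:{event['user_id']}")
        return {"user_id": event["user_id"], "visits": visits}

    return handler
```

Two handler "instances" sharing one store see the same counts, which is exactly the property that lets Lambda route any request to any environment.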

To complement stateless design, external storage solutions are essential for maintaining performance and scalability. Some teams report latency reductions of up to 80% after moving from traditional databases to DynamoDB, and organizations using Azure Cosmos DB have reported scalability improvements of up to 90%.

Caching is another effective strategy for optimizing performance. By implementing services like Redis or ElastiCache, you can reduce database load by up to 75%, significantly improving response times. This is especially useful for storing frequently accessed data, such as user sessions, configuration settings, or precomputed results.

Message queues like Amazon SQS play a key role in decoupling services and absorbing traffic spikes. By buffering messages, they keep processing rates steady and make the system more resilient; some teams report cutting data loss by more than half.

When selecting an external storage solution, it’s important to weigh factors like latency, scalability, and pricing:

| Service | Latency | Scaling Capability | Pricing Model | Best Use Case |
|---|---|---|---|---|
| AWS DynamoDB | Single-digit milliseconds | Millions of requests/second | Pay-per-request | High-throughput applications |
| Azure Cosmos DB | Less than 10ms | Global distribution | Resource-based | Multi-region applications |
| Redis | Sub-millisecond | Horizontal clustering | Instance-based | Caching and session storage |

For session-specific data, external storage such as a database or cache ensures that any function instance can handle any user request, which is what makes true horizontal scaling possible. Caching with Redis can also cut query times by up to 75%, keeping your serverless functions responsive even under heavy traffic.

Keeping serverless systems running smoothly relies on effective monitoring and performance tuning. By gaining visibility into how your functions behave, you can prevent concurrency issues and optimize overall performance. Below, we’ll explore key metrics, alert strategies, and the role of log reviews in ensuring your serverless applications perform at their best.

Focusing on the right metrics helps you stay ahead of potential problems instead of constantly reacting to them. AWS Lambda offers several critical metrics that are essential for managing concurrency and performance.

For deeper insights, tools like Lumigo offer advanced features such as visual debugging, distributed tracing, and tools for identifying cold starts, which can impact performance.

Metrics alone aren’t enough - you need real-time alerts and dashboards to turn data into action. Dashboards can highlight critical functions and flag anomalies, making it easier to spot issues like memory overuse or application bottlenecks.

Customizable alerts are equally important. They ensure that the right information reaches the right team members at the right time. Studies show that organizations with well-designed alerting systems experience less downtime. However, be mindful of “alert fatigue,” which affects nearly 60% of IT professionals and can reduce response efficiency.

"Dashbird gives us a simple and easy-to-use tool to have peace of mind and know that all of our Serverless functions are running correctly. We are instantly aware now if there's a problem. We love the fact that we have enough information in the Slack notification itself to take appropriate action immediately and know exactly where the issue occurred." - Daniel Lang, CEO and co-founder of MangoMint

Logs provide a treasure trove of information for spotting performance delays and fine-tuning your settings. Regular log reviews and adjustments are key to ongoing optimization. Research suggests that identifying inefficiencies through log analysis can lead to significant cost savings - up to 70% in some cases.

Incorporating log metrics into your CI/CD pipeline allows you to address potential performance issues before they hit production. This proactive approach can reduce performance-related incidents by up to 30%.

To further enhance monitoring, configure CloudWatch alarms for key concurrency metrics and create custom metrics to complement the default ones. Pair these with Application Auto Scaling policies that respond to both technical indicators (like provisioned concurrency utilization) and business needs (like transaction volume). Keep in mind that idle Lambda execution environments may stay warm for a while (some observations put it at about 45 minutes), but AWS does not guarantee how long, so avoid scaling strategies that depend on it.

Lastly, review your log retention policies to balance storage costs with long-term operational needs. A well-thought-out approach to log management supports both scalability and efficiency.

AWS offers managed services that can help handle traffic spikes and streamline workflows. These services work well alongside monitoring and scaling strategies, providing an extra layer of control over concurrency.

Amazon SQS is a message queuing service that acts as a buffer during traffic surges. It decouples producers from consumers in microservices, distributed systems, and serverless applications, so your Lambda functions aren't overwhelmed by direct requests and can instead process messages at a steady, manageable pace.
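
On the consuming side, a Lambda function subscribed to an SQS queue receives messages in batches. The sketch below processes each record independently and reports only the failed ones, so SQS retries just those messages instead of the whole batch. It assumes `ReportBatchItemFailures` is enabled on the event source mapping, and `process_order` is a hypothetical placeholder for business logic. To cap how many copies of the consumer run at once, the event source mapping's maximum concurrency setting (`ScalingConfig`) is the relevant control.

```python
import json


def process_order(order: dict) -> None:
    """Hypothetical business logic; raises on bad input."""
    if "order_id" not in order:
        raise ValueError("missing order_id")


def handler(event: dict, context=None) -> dict:
    """SQS-triggered Lambda handler that reports per-message failures."""
    failures = []
    for record in event.get("Records", []):
        try:
            process_order(json.loads(record["body"]))
        except Exception:
            # Only failed messages become visible on the queue again for a retry.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```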

For scenarios requiring strict order and exactly-once processing, SQS FIFO queues are a great fit. They also support FIFO message groups, which can help limit concurrency for AWS Step Functions executions.
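
As an illustration, the helper below builds `send_message` arguments for a FIFO queue. Messages sharing a `MessageGroupId` are processed strictly in order, while different groups can be processed in parallel. Deriving `MessageDeduplicationId` from a hash of the body is one option (enabling content-based deduplication on the queue is another); the helper name, queue URL, and grouping by customer are assumptions.

```python
import hashlib
import json


def fifo_message_request(queue_url: str, payload: dict, group_id: str) -> dict:
    """Build kwargs for sqs_client.send_message(**kwargs) on a FIFO queue."""
    if not queue_url.endswith(".fifo"):
        raise ValueError("FIFO queue names must end in .fifo")
    # Canonical JSON so logically equal payloads produce identical bodies.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return {
        "QueueUrl": queue_url,
        "MessageBody": body,
        # Messages with the same group ID are processed one at a time, in order.
        "MessageGroupId": group_id,
        # Duplicates sent within the 5-minute deduplication interval are dropped.
        "MessageDeduplicationId": hashlib.sha256(body.encode("utf-8")).hexdigest(),
    }
```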

AWS Step Functions, on the other hand, is designed to coordinate multiple AWS services into cohesive workflows. Unlike SQS, which requires custom tracking at the application level, Step Functions automatically handles task tracking and event monitoring. It also keeps tabs on workflow states, storing data passed between steps. This ensures your application can pick up where it left off after a failure. Step Functions can even manage workflows for applications hosted outside AWS, as long as they can connect via HTTPS.

When used together, SQS and Step Functions create a powerful system for buffering traffic and orchestrating workflows, helping to prevent function overload while ensuring reliable execution.
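
To show how Step Functions can protect downstream functions, here is a minimal sketch of an Amazon States Language definition built in Python: a `Map` state fans out over `$.items` but runs at most 10 iterations at a time, and each task retries with exponential backoff. The Lambda ARN, state names, and limits are hypothetical; the resulting JSON string is what you would pass as the `definition` when creating the state machine.

```python
import json


def fan_out_definition(worker_arn: str, max_concurrency: int = 10) -> str:
    """Return an ASL definition that processes items with bounded parallelism."""
    definition = {
        "Comment": "Process items in parallel without overwhelming the worker",
        "StartAt": "ProcessItems",
        "States": {
            "ProcessItems": {
                "Type": "Map",
                "ItemsPath": "$.items",
                "MaxConcurrency": max_concurrency,  # cap on parallel iterations
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "INLINE"},
                    "StartAt": "ProcessItem",
                    "States": {
                        "ProcessItem": {
                            "Type": "Task",
                            "Resource": worker_arn,
                            # Retry failed tasks after 2s, 4s, then 8s.
                            "Retry": [{
                                "ErrorEquals": ["States.TaskFailed"],
                                "IntervalSeconds": 2,
                                "MaxAttempts": 3,
                                "BackoffRate": 2.0,
                            }],
                            "End": True,
                        }
                    },
                },
                "End": True,
            }
        },
    }
    return json.dumps(definition, indent=2)
```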

| Aspect | Step Functions | SQS |
|---|---|---|
| Primary Purpose | Workflow orchestration and state management | Message queuing and decoupling |
| State Tracking | Built-in application state tracking | Requires custom implementation |
| Workflow Capabilities | Comprehensive workflow coordination | Basic workflows with limited functionality |
| Best For | Complex multi-step processes | Simple message buffering and decoupling |

Caching is another effective way to reduce system load, especially during periods of high traffic. By cutting down on redundant computations and database queries, caching can significantly boost performance. This is particularly important for serverless applications, which often need to rehydrate state with each invocation. Reducing database access for every request can lead to noticeable performance improvements.

"Caching is a proven strategy for building scalable applications." – Yan Cui

The benefits of caching are hard to ignore. Studies show that effective caching can enhance application performance by up to 80% and reduce database load by as much as 70% during peak traffic. In-memory data stores can also speed up data retrieval by up to 50% compared to traditional database queries.

"Behind every large-scale system is a sensible caching strategy." – Yan Cui

Caching not only improves scalability and performance but also helps control costs when scaling to accommodate millions of users. For serverless setups, caching can ease the load on your database, potentially eliminating the need to over-provision resources during traffic spikes.

When implementing caching, focus on data that's expensive to compute or retrieve. Define what can safely be cached, set appropriate TTL values, and establish an eviction policy that suits your access patterns and performance needs. This helps you avoid serving stale data or undermining reads that require strong consistency.

| Cache Strategy | Description | Use Case |
|---|---|---|
| Cache-Aside | Data is retrieved from the cache when available; otherwise, it's fetched from the database and then cached | Ideal for read-heavy workloads with mostly static data |
| Write-Through | Data is written to both the cache and database simultaneously | Useful for applications needing strong consistency and faster reads |
| Write-Behind | Data is written to the cache first and later synced to the database asynchronously | Best for high write volumes that can handle slight delays |
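
The cache-aside strategy is the easiest to sketch. The example below uses a small in-process dictionary with a TTL so it runs anywhere; it stands in for a shared cache such as Redis, where the equivalent of `set` would store the value with an expiry (for example `SET key value EX 60`). Class and function names are hypothetical. Inside Lambda, a module-level cache like this survives only while a single execution environment stays warm, which is why shared caches matter at scale.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple

_MISSING = object()  # sentinel, so cached None values are still treated as hits


class TTLCache:
    """Tiny in-process cache whose entries expire ttl_seconds after being set."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[Hashable, Tuple[Any, float]] = {}

    def get(self, key: Hashable) -> Any:
        entry = self._entries.get(key)
        if entry is None:
            return _MISSING
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # lazily evict the stale entry
            return _MISSING
        return value

    def set(self, key: Hashable, value: Any) -> None:
        self._entries[key] = (value, self.clock() + self.ttl_seconds)


def cache_aside(cache: TTLCache, key: Hashable,
                load: Callable[[Hashable], Any]) -> Any:
    """Return the cached value, or load it from the source of truth and cache it."""
    value = cache.get(key)
    if value is _MISSING:
        value = load(key)  # e.g. a database query
        cache.set(key, value)
    return value
```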

For serverless architectures, services like Momento offer serverless caching that scales on demand and charges based on usage. ElastiCache, by contrast, requires your functions to run within a VPC and incurs costs based on uptime, so Momento can be the more flexible and cost-efficient choice, especially for spiky workloads.

Caching can also enhance data consistency in distributed systems by serving as a central repository for frequently accessed data. The most effective caching strategies can be applied at various levels, including the client side for static content, at the edge for API responses, and within application code.

Managing concurrency effectively is essential for building reliable serverless applications. With the serverless architecture market expected to hit $21.1 billion by 2025, implementing these practices is crucial for staying ahead and ensuring your systems are dependable.

"Serverless is a way to focus on business value." - Ben Kehoe, cloud robotics research scientist at iRobot

By following these strategies, you can handle traffic efficiently, manage costs, and maintain consistent performance. A thoughtful approach to concurrency management is key to optimizing serverless operations.

Here’s a quick summary of the most important practices to keep your serverless applications running smoothly:

Concurrency Configuration:

Performance Optimization:

Error Handling and Reliability:

Monitoring and Cost Control:

Architecture Design:

This checklist serves as a guide to refine and strengthen your serverless setup. Start by auditing your current configuration and prioritize reserved concurrency for your most critical functions. This step alone can protect against resource shortages without adding extra costs.

Next, analyze metrics to identify functions frequently affected by cold starts or throttling. These are prime candidates for provisioned concurrency or architectural improvements.

As you develop your concurrency strategy, keep in mind that Lambda can scale each function by up to 6,000 execution environments per minute (1,000 every 10 seconds). Be sure to account for the limits of your downstream systems as well.

The real key is ongoing monitoring and adjustment. As your application scales and traffic patterns shift, revisit these practices to ensure your serverless architecture remains efficient and cost-effective.

Reserved concurrency allows you to set aside a specific number of simultaneous execution environments for a Lambda function. This ensures your function always has the resources it needs to handle incoming requests, even when traffic surges.

By reserving capacity, your function no longer competes with other functions for the concurrency available in your AWS account, so it won't be throttled as long as demand stays within its reservation. At the same time, the reservation caps the function at that level, which contains sudden traffic spikes and keeps performance steady and predictable.

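To minimize cold start delays in AWS Lambda, reduce your deployment package size, use provisioned concurrency for functions with predictable traffic, warm critical functions with scheduled invocations, and test higher memory settings, which also allocate more CPU.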

These steps can enhance performance and ensure a more seamless experience for users of serverless applications.

Caching with Redis can significantly boost the performance and scalability of serverless applications by keeping frequently accessed data in memory. By doing so, it cuts down on latency and eases the strain on your primary database, leading to quicker response times for users.

On top of that, Redis offers dynamic caching and effortless scaling capabilities, making it well-suited for managing sudden surges in traffic. Its lightning-fast in-memory operations, adaptable scaling features, and versatile data structures make it a go-to option for improving responsiveness and ensuring consistent performance, even under fluctuating workloads.
